Hydro-Quebec NECEC Transmission Line faces Maine PUC scrutiny over clean energy claims, greenhouse gas emissions, spillage capacity, resource shuffling, and Massachusetts contracts, amid opposition from natural gas generators and environmental groups debating public need.

Key Points

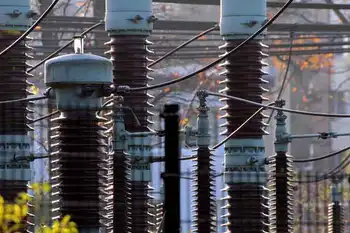

A $1B Maine corridor for Quebec hydropower to Massachusetts, debated over emissions, spillage, and public need.

✅ Maine PUC weighing public need and ratepayer benefits

✅ Emissions impact disputed: resource shuffling vs new supply

✅ Hydro-Quebec spillage claims questioned without data

As Maine regulators are deciding whether to approve construction of a $1 billion electricity corridor across much of western Maine, the Canadian hydroelectric utility poised to make billions of dollars from the project has been absent from the process.

This has left both opponents and supporters of the line arguing about how much available energy the utility has to send through a completed line, and whether that energy will help fulfill the mission of the project: fighting climate change.

And while the utility has avoided making its case before regulators, which requires submitting to cross-examination and discovery, it has engaged in a public relations campaign to try to win support from the region's newspapers.

Government-owned Hydro-Quebec controls dams and reservoirs generating hydroelectricity throughout its namesake province. It recently signed agreements to sell electricity across the proposed line, named the New England Clean Energy Connect, to Massachusetts as part of the state's effort to reduce its dependence on fossil fuels, including natural gas.

At the Maine Public Utilities Commission, attorneys for Central Maine Power Co., which would build and maintain the line, have been sparring with the opposition over the line's potential impact on Maine and its electricity consumers. Leading the opposition is a coalition of natural gas electricity generators that stand to lose business should the line be built, as well as the Natural Resources Council of Maine, an environmental group.

That unusual alliance of environmental and business groups wants Hydro-Quebec to answer questions about its hydroelectric system, which they argue can't deliver the amount of electricity promised to Massachusetts without diverting energy from other regions.

In that scenario, critics say the line would not produce the reduction in greenhouse gas emissions that CMP and Hydro-Quebec have made a central part of their pitch for the project. Instead, other markets currently buying energy from Hydro-Quebec, such as New York, Ontario and New Brunswick, would see hydroelectricity imports decrease and have to rely on other sources of energy, including coal or oil, to make up the difference. If that happened, the total amount of clean energy in the world would remain the same.

Opponents call this possibility "greenwashing." Massachusetts regulators have described these circumstances as "resource shuffling."

But CMP spokesperson John Carroll said that if hydropower was diverted from nearby markets to power Massachusetts, those markets would not turn to fossil fuels. Rather they would seek to develop other forms of renewable energy "leading to further reductions in greenhouse gas emissions in the region."

Hydro-Quebec said it has plenty of capacity to increase its electricity exports to Massachusetts without diverting energy from other places.

However, Hydro-Quebec is not required to participate -- and has not voluntarily participated -- in regulatory hearings where it would be subject to cross-examination and have to testify under oath. Some participants wish it would.

At a January hearing at the Maine Public Utilities Commission, hearing examiner Mitchell Tannenbaum had to warn experts giving testimony to "refrain from commentary regarding whether Hydro-Quebec is here or not" after they complained about its absence when trying to predict potential ramifications of the line.

"I would have hoped they would have been visible and available to answer legitimate questions in all of these states through which their power is going to be flowing," said Dot Kelly, a member of the executive committee at the Maine Chapter of the Sierra Club who has participated in the line's regulatory proceedings as an individual. "If you're going to have a full and fair process, they have to be there."


While Hydro-Quebec has not presented data on its system directly to Maine regulators, it has brought its case to the press. Central to that case is the fact that it's "spilling" water from its reservoirs because it is limited by how much electricity it can export. It said that it could send more water through its turbines and lower reservoir levels, eliminating spillage and creating more energy, if only it had a way to get that energy to market. Hydro-Quebec said the line would make that possible, and, in doing so, help lower emissions and fight climate change.

"We have that excess potential that we need to use. Essentially, it's a good problem to have so long as you can find an export market," Hydro-Quebec spokesperson Serge Abergel told the Bangor Daily News.

Hydro-Quebec made its "spillage" case to the editorial boards of The Boston Globe, The Portland Press Herald and the BDN, winning qualified endorsements from the Globe and Press Herald. (The BDN editorial board has not weighed in on the project.)

Opponents have questioned why Hydro-Quebec is willing to present its case to the press but not to regulators.

"We need a better answer than 'just trust us,'" Natural Resources Council of Maine attorney Sue Ely said. "What's clear is that CMP and HQ are engaging in a full-court publicity tour peddling false transparency in an attempt to sell their claims of greenhouse gas benefits."

Energy generators aren't typically parties to public utility commission proceedings involving the building of transmission lines, but Maine regulators don't typically evaluate projects that will help customers in another state buy energy generated in a foreign country.

"It's a unique case," said Maine Public Advocate and former Democratic Senate Minority Leader Barry Hobbins, who has neither endorsed nor opposed the project. Hobbins noted the project was not proposed to improve reliability for Maine electricity customers, which is typically the point of new transmission line proposals evaluated by the commission. Instead, the project "is a straight shot to Massachusetts," Hobbins said.

Maine Public Utilities Commission spokesperson Harry Lanphear agreed. "The Commission has never considered this type of project before," he said in an email.

In order to proceed with the project, CMP must convince the Maine Public Utilities Commission that the proposed line would fill a "public need" and benefit Mainers. Among other benefits, CMP said it will help lower electricity costs and create jobs in Maine. A decision is expected in the spring.

Given the uniqueness of the case, even the commission seems unsure about how to apply the vague "public need" standard. On Jan. 14, commission staff asked case participants to weigh in on how it should apply Maine law when evaluating the project, including whether the hydroelectricity that would travel over the line should be considered "renewable" and whether Maine's own carbon reduction goals are relevant to the case.

James Speyer, an energy consultant whose firm was hired by natural gas company and project opponent Calpine to analyze the market impacts of the line, said he has testified before roughly 20 state public utility commissions and has never seen a proceeding like this one.

"I've never been in a case where one of the major beneficiaries of the PUC decision is not in the case, never has filed a report, has never had to provide any data to support its assertions, and never has been subject to cross examination," Speyer said. "Hydro-Quebec is like a black box."

Hydro-Quebec would gladly appear before the Maine Public Utilities Commission, but it has not been invited, said spokesperson Abergel.

"The PUC is doing its own process," Abergel said. "If the PUC were to invite us, we'd gladly intervene. We're very willing to collaborate in that sense."

But that's not how the commission process works. Individuals and organizations can intervene in cases, but the commission does not invite them to the proceedings, commission spokesperson Lanphear said.

CMP spokesperson Carroll dismissed concerns over emissions, noting that Hydro-Quebec is near the end of completing a more than 15-year effort to develop its clean energy resources. "They will have capacity to satisfy the contract with Massachusetts in their reservoirs," Carroll said.

While Maine regulators are evaluating the transmission line, Massachusetts' Department of Public Utilities is deciding whether to approve 20-year contracts between Hydro-Quebec and that state's electric utilities. Those contracts, which Hydro-Quebec has estimated could be worth close to $8 billion, govern how the utility sells electricity over the line.

Dean Murphy, a consultant hired by the Massachusetts Attorney General's office to review the contracts, testified before Massachusetts regulators that the agreements do not require a reduction in global greenhouse gas emissions. Murphy also warned the contracts don't actually require Hydro-Quebec to increase the total amount of energy it sends to New England, as energy could be shuffled from established lines to the proposed CMP line to satisfy the contracts.

Parties in the Massachusetts proceeding are also trying to get more information from Hydro-Quebec. Energy giant NextEra is currently trying to convince Massachusetts regulators to issue a subpoena to force Hydro-Quebec to answer questions about how its exports might change with the construction of the transmission line. Hydro-Quebec and CMP have opposed the motion.

Hydro-Quebec has a reputation for guarding its privacy, according to Hobbins.

"It would have been easier to not have to play Sherlock Holmes and try to guess or try to calculate without having a direct 'yes' or 'no' response from the entity itself," Hobbins said.

Ultimately, the burden of proving that Maine needs the line falls on CMP, which is also responsible for making sure regulators have all the information they need to make a decision on the project, said former Maine Public Utilities Commission Chairman Kurt Adams.

"Central Maine Power should provide the PUC with all the info that it needs," Adams said. "If CMP can't, then one might argue that they haven't met their burden."

'They treat HQ with nothing but distrust'

If completed, the line would bring 9.45 terawatt hours of electricity from Quebec to Massachusetts annually, or about a sixth of the total amount of electricity Massachusetts currently uses every year (and roughly 80 percent of Maine's annual load). CMP's parent company Avangrid would make an estimated $60 million a year from the line, according to financial analysts.

As part of its legally mandated efforts to reduce carbon emissions and fight climate change, Massachusetts would pay the $950 million cost of constructing the line. The state currently relies on natural gas, a fossil fuel, for nearly 70 percent of its electricity, a figure that helps explain natural gas companies' opposition to the project.

A panel of experts recently warned that humanity has 12 years to keep global temperatures from rising above 1.5 degrees Celsius and prevent the worst effects of climate change, which include floods, droughts and extreme heat.

The line could lower New England's annual carbon emissions by as much as 3 million metric tons, an amount roughly equal to Washington D.C.'s annual emissions. Opponents worry that reduction could be mostly offset by increases in other markets.
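The dispute over offsets comes down to simple arithmetic, sketched below. The 3 million metric ton figure is the upper-end New England estimate cited above; the offset shares are hypothetical values chosen only for illustration, since no party has put an agreed figure on the record, and the function name is likewise an assumption.

```python
def net_global_reduction(regional_cut_mt, offset_fraction):
    """Net global cut (million metric tons) after emissions rise in other markets.

    offset_fraction is the share of the regional cut cancelled out elsewhere.
    """
    if not 0.0 <= offset_fraction <= 1.0:
        raise ValueError("offset_fraction must be between 0 and 1")
    return regional_cut_mt * (1.0 - offset_fraction)


REGIONAL_CUT_MT = 3.0  # upper-end New England estimate cited in the article

# Hypothetical offset shares, for illustration only; none comes from the record.
for offset in (0.0, 0.5, 1.0):
    net = net_global_reduction(REGIONAL_CUT_MT, offset)
    print(f"offset {offset:.0%}: net global cut of {net:.1f} million metric tons")
```

With no offset, the full regional cut counts globally; with a complete offset, the net global change is zero, which is the outcome opponents describe as resource shuffling.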

But while both sides have claimed they are fighting for the environment, much of the debate features giant corporations with headquarters outside of New England fighting over the future of the region's electricity market, echoing customer backlash seen in other utility takeovers.

Hydro-Quebec is owned by the people of Quebec, and CMP is owned by Avangrid, which is in turn owned by Spanish energy giant Iberdrola. Leading the charge against the line are several energy companies in the Fortune 500, including Houston-based Calpine and Florida-based NextEra Energy.

However, only one side of the debate counts environmental groups as part of its coalition, and, curiously enough, that's the side with fossil fuel companies.

Some environmental groups, including the Natural Resources Council of Maine and Environment Maine, have come out against the line, while others, including the Acadia Center and the Conservation Law Foundation, are still deciding whether to support or oppose the project. So far, none have endorsed the line.

"It is discouraging that some of the environmental groups are so opposed, but it seems the best is the enemy of the good," said CMP's Carroll in an email. "They seem to have no sense of urgency; and they treat HQ with nothing but distrust."

Much of the environmentally minded opposition to the project focuses on the impact the line would have on local wildlife and tourism.

Sandi Howard administers the Say NO To NECEC Facebook page and lives in Caratunk, one of the communities along the proposed path of the line. She said opposition to the line might change if it was proven to reduce emissions.

"If it were going to truly reduce global CO2 emissions, I think it would be a different conversation," Howard said.

Not the first choice

Before Maine, New Hampshire had its own debate over whether it should serve as a conduit between Quebec and Massachusetts. The proposed Northern Pass transmission line would have run the length of the state. It was Massachusetts' first choice to bring Quebec hydropower to its residents.

But New Hampshire's Site Evaluation Committee unanimously voted to reject the Northern Pass project in February 2018 on the grounds that the project's sponsor, Eversource, had failed to prove the project would not interfere with local business and tourism. Though it was the source of the electricity that would have traveled over the line, Hydro-Quebec was not a party to the proceedings.

In its decision, the committee noted the project would not reduce emissions if it was not coupled with a "new source of hydropower" and the power delivered across the line was "diverted from Ontario and New York." The committee added that it was unclear if the power would be new or diverted.

The next month, Massachusetts replaced Northern Pass by selecting CMP's proposed line. As the project came before Maine regulators, questions about Hydro-Quebec and emissions persisted. Two different analyses of CMP's proposed line, including one by the Maine Public Utilities Commission's independent consultant, found the line would greatly reduce New England's emissions.

But neither of those studies took into account the line's impact on emissions outside of New England. A study by Calpine's consultant, Energyzt, found New England's emissions reduction could be mostly offset by increased emissions in other areas, including New Brunswick and New York, that would see hydroelectricity imports shrink as energy was redirected to fulfill the contract with Massachusetts.

'They failed in any way to back up those spillage claims'

Hydro-Quebec seemed content to let CMP fight for the project alone before regulators for much of 2018. But at the end of the year, the utility took a more proactive approach, meeting with editorial boards and providing a two-page letter detailing its "spillage" issues to CMP, which entered it into the record at the Maine Public Utilities Commission.

The letter provided figures on the amount of water the utility spilled that could have been converted into sellable energy, if only Hydro-Quebec had a way to get it to market. Instead, by "spilling" the water, the company essentially wasted it.

Instead of sending water through turbines or storing it in reservoirs, hydroelectric operators sometimes discharge water held behind dams down spillways. This can be done for environmental reasons. Other times it is done because the operator has so much water it cannot convert it into electricity or store it, which is usually a seasonal issue: Reservoirs often contain the most water in the spring as temperatures warm and ice melts.

Hydro-Quebec said that, in 2017, it spilled water that could have produced 4.5 terawatt hours of electricity, or slightly less than half the energy needed to fulfill the Massachusetts contracts. In 2018, the letter continued, Hydro-Quebec spilled water that could have been converted into 10.4 terawatt hours of electricity. The company said it didn't spill at all due to transmission constraints prior to 2017.

The contracts Hydro-Quebec signed with the Massachusetts utilities are for 9.45 terawatt hours annually for 20 years. The utility's letter, in other words, offered only one year of data, 2018, in which "spilled" energy alone would have covered the terms of the contracts.
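The comparison between the letter's spillage figures and the contract volume can be checked with a short calculation. This is a minimal sketch that uses only the three numbers reported above; the variable and function names are illustrative, and it assumes every spilled terawatt hour could actually be delivered, which experts quoted below dispute.

```python
# Figures reported in Hydro-Quebec's letter, in terawatt hours (TWh).
CONTRACT_TWH = 9.45
spilled_twh = {2017: 4.5, 2018: 10.4}


def coverage(spilled, contract=CONTRACT_TWH):
    """Return the fraction of the annual contract volume that spilled energy could cover."""
    return spilled / contract


for year, twh in sorted(spilled_twh.items()):
    share = coverage(twh)
    status = "covers" if share >= 1.0 else "falls short of"
    print(f"{year}: {twh} TWh spilled is {share:.0%} of the contract and {status} it")
```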

"Reservoir levels have been increasing in the last 15 years. Having reached their maximum levels, spillage maneuvers became necessary in 2017 and 2018," said Hydro-Quebec spokesperson Lynn St. Laurent.

By providing the letter through CMP, Hydro-Quebec did not have to subject its spillage figures to cross-examination.

Dr. Shaleen Jain, a civil and environmental engineering professor at the University of Maine, said that, while spilled water could be converted into power generation in some circumstances, spills happen for many different reasons. Knowing whether spillage can be translated into energy requires a great deal of analysis.

"Not all of it can be repurposed or used for hydropower," Jain said.

In December, one of the Maine Public Utilities Commission's independent consultants, Gabrielle Roumy, told the commission that there's "no way" to "predict how much water would be spilled each and every year." Roumy, who previously worked for Hydro-Quebec, added that even after seeing the utility's spillage figures, he believed it would need to divert energy from other markets to fulfill its commitment to Massachusetts.

"I think at this point we're still comfortable with our assumptions that, you know, energy would generally be redirected from other markets to NECEC if it were built," Roumy said.

In January, Tanya Bodell, the founder and executive director of consultant Energyzt, testified before the commission on behalf of Calpine that it was impossible to know why Hydro-Quebec was spilling without more data.

"There's a lot of details you'd have to look at in order to properly assess what the reason for the spillage is," Bodell said. "And you have to go into an hourly level because the flows vary across the year, within the month, the week, the days. ...And, frankly, it would have been nice if Hydro-Quebec was here and brought their model and allowed us to see how this could help them to sell more."

Even though CMP and Hydro-Quebec's path to securing approval of the project does not go through the Legislature, and despite a Maine court ruling that energized Hydro-Quebec's export bid, lawmakers have taken notice of Hydro-Quebec's absence. Rep. Seth Berry, D-Bowdoinham, the House chairman of the Joint Committee on Energy, Utilities and Technology and a frequent critic of CMP, said he would like to see Hydro-Quebec "show up and subject their proposal to examination and full analysis and public examination by the regulators and the people of Maine."

"They're trying to sell an incredibly lucrative proposal, and they failed in any way to back up those spillage claims with defensible numbers and defensible analysis," Berry said.

Berry was part of a bipartisan group of Maine lawmakers that wrote a letter to Massachusetts regulators last year expressing concerns about the project, which included doubts about whether the line would actually reduce global greenhouse gas emissions. On Monday, he announced legislation that would direct the state to create an independent entity to buy out CMP from its foreign investors.

'No benefit to remaining quiet'

Hydro-Quebec would like to provide answers, but "there is always a commercially sensitive information concern when we do these things," said spokesperson Abergel.

"There might be stuff we can do, having an independent study that looks at all of this. I'm not worried about the conclusion," Abergel said. "I'm worried about how long it takes."

Instead of asking Hydro-Quebec questions directly, participants in both Maine and Massachusetts regulatory proceedings have had to direct questions for Hydro-Quebec to CMP. That arrangement may be part of Hydro-Quebec's strategy to control its information, said former Maine Public Utilities Commissioner David Littell.

"From a tactical point of view, it may be more beneficial for the evidence to be put through Avangrid and CMP, which actually doesn't have that back-up info, so can't provide it," Littell said.

Getting information about the line from CMP, and its parent company Avangrid, has at times been difficult, opponents say.

In August 2018, the commission's staff warned CMP in a legal filing that it was concerned "about what appears to be a lack of completeness and timeliness by CMP/Avangrid in responding to data requests in this proceeding."

The trouble in getting information from Hydro-Quebec and CMP only creates more questions for Hydro-Quebec, said Jeremy Payne, executive director of the Maine Renewable Energy Association, which opposes the line in favor of Maine-based renewables.

"There's a few questions that should have relatively simple answers. But not answering a couple of those questions creates more questions," Payne said. "Why didn't you intervene in the docket? Why are you not a party to the case? Why won't you respond to these concerns? Why wouldn't you open yourself up to discovery?"

"I don't understand why they won't put it to bed," Payne said. "If you've got the proof to back it up, then there's no benefit to remaining quiet."
