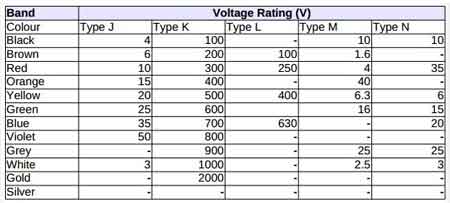

Capacitor Voltage Rating Limitations

Substation Grounding Training

Our customized live online or in‑person group training can be delivered to your staff at your location.

- Live Online

- 12 hours Instructor-led

- Group Training Available

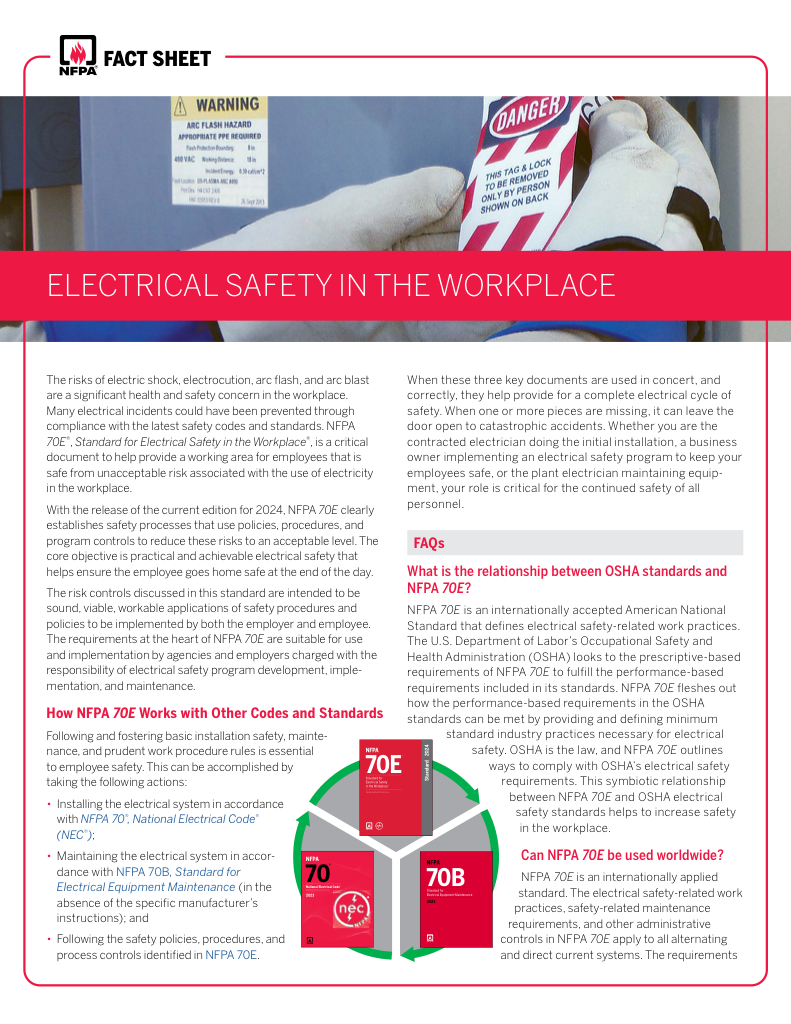

Download Our NFPA 70E Fact Sheet – 2024 Electrical Safety Edition

- Understand how NFPA 70E works with NEC and NFPA 70B standards

- Clarify the shared responsibility between employers and employees

- Learn how NFPA 70E supports OSHA compliance

Capacitor voltage rating indicates the maximum voltage a capacitor can safely handle without failure. Exceeding this limit can cause insulation breakdown or explosion. Always choose a capacitor with a voltage rating above the circuit's operating voltage.

Quick Definition: Capacitor Voltage Rating

Capacitor voltage rating is the highest continuous voltage a capacitor can safely withstand.

✅ Prevents insulation breakdown and overheating

✅ Critical for selecting capacitors in high-voltage circuits

✅ Common ratings include 16V, 25V, 50V, 100V, and higher

When selecting capacitors, it’s critical to ensure that the applied voltage never exceeds the device’s rated limit, especially for sensitive ceramic capacitors. Whether working with DC or AC circuits, the rule of thumb is to choose a capacitor with a voltage rating at least 1.5 to 2 times higher than the maximum applied voltage. This safety margin helps prevent dielectric breakdown, supports long-term reliability, and accounts for voltage spikes that can occur in real-world operating conditions.
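The rule of thumb above can be sketched in a few lines of Python. This is a minimal illustration, not a sizing tool: the function names are hypothetical, and the list of standard ratings contains only the values mentioned in this article (real product lines offer many more).

```python
# Ratings named in this article; an assumption for illustration only.
STANDARD_RATINGS_V = [16, 25, 50, 100]


def minimum_rating(v_max, margin=1.5):
    """Return the lowest acceptable rated voltage for a maximum applied voltage."""
    if v_max <= 0:
        raise ValueError("v_max must be positive")
    if margin < 1:
        raise ValueError("margin must be at least 1")
    return v_max * margin


def pick_standard_rating(v_max, margin=1.5, ratings=STANDARD_RATINGS_V):
    """Return the smallest listed rating that meets the margin, or None."""
    required = minimum_rating(v_max, margin)
    for rating in sorted(ratings):
        if rating >= required:
            return rating
    return None  # nothing in the list is high enough


if __name__ == "__main__":
    # 12 V circuit: 1.5x margin needs 18 V, 2x margin needs 24 V.
    print(pick_standard_rating(12))
    print(pick_standard_rating(12, margin=2.0))
```

For a 12V circuit, both the 1.5x and the 2x margin land on the 25V part, because 16V falls short of the 18V minimum. A result of `None` signals that a higher-rated series is needed.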

AC vs. DC Voltage Ratings

The capacitor voltage rating is not interchangeable between DC and AC service. A part rated for 100V DC cannot automatically handle 100V AC. An AC voltage is normally quoted as an RMS value, so a 100V AC sine wave peaks at about 141V, and the continuous polarity reversal stresses and heats the dielectric more than a steady DC voltage does. As a rule of thumb, an AC capacitor voltage rating is about 70% of the corresponding DC value, but a datasheet AC rating takes precedence where one is given. This becomes especially relevant in power factor correction systems or motor start circuits.
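The relationship between AC and DC ratings can be checked numerically. The sketch below assumes a pure sine wave and uses the article's 70% rule of thumb, which is close to the 1/√2 ≈ 0.707 ratio between RMS and peak voltage. The function names are hypothetical, and the peak check is a simplification: real AC ratings also reflect heating and frequency, so a datasheet value should always win.

```python
import math

# Rule-of-thumb ratio from the text, not a datasheet value.
AC_TO_DC_RATIO = 0.7


def estimated_ac_rating(dc_rating_v, ratio=AC_TO_DC_RATIO):
    """Rough AC (RMS) voltage estimate for a part with a given DC rating."""
    return dc_rating_v * ratio


def peak_from_rms(v_rms):
    """Peak voltage of a sine wave with the given RMS value."""
    return v_rms * math.sqrt(2)


def peak_within_dc_rating(dc_rating_v, v_rms):
    """True if the sine-wave peak stays at or below the DC rating."""
    return peak_from_rms(v_rms) <= dc_rating_v


if __name__ == "__main__":
    print(estimated_ac_rating(100))         # rule-of-thumb AC estimate
    print(peak_from_rms(100))               # peak of a 100 V RMS sine wave
    print(peak_within_dc_rating(100, 100))  # 100 V RMS on a 100 V DC part
    print(peak_within_dc_rating(100, 70))   # 70 V RMS on the same part
```

A 100V RMS sine wave peaks near 141V, which exceeds a 100V DC rating, while 70V RMS peaks just under 100V. That is why the 70% figure works as a first estimate.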

To understand how voltage behavior affects power delivery, visit apparent power in AC circuits or explore apparent power vs real power.

Dielectric Material and Performance

The insulating material inside determines how well a part can handle voltage and environmental changes. For example:

- NP0 (C0G) offers minimal capacitance variation across a wide range of temperatures.

- X7R types allow for higher capacitance, but their capacitance shifts more with temperature and applied voltage.

- Z5U/Y5V materials are cost-effective but highly sensitive to heat and voltage.

Component behavior is not solely based on nameplate ratings; it depends on where and how it’s used. In sensitive circuits like those involving power factor correction, dielectric selection is key. Learn more in our guide to capacitor banks and power factor.

Frequency and Heat Considerations

As frequency rises, losses inside the capacitor generate more heat, which reduces voltage tolerance. Similarly, high temperatures degrade the dielectric, resulting in lower breakdown voltage and a shorter service life. In high-frequency applications or harsh environments, derating becomes necessary. This means selecting parts rated well above the maximum expected voltage, so that the reduced tolerance still covers the actual operating conditions.
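Temperature derating is often expressed as a curve on the datasheet. The sketch below assumes a simple linear curve: full rated voltage up to a knee temperature, then a straight-line drop to a fraction of the rating at the maximum temperature. The knee (85 °C), maximum (125 °C), and end fraction (0.67) are hypothetical placeholders chosen only to make the example concrete, and the function name is likewise an assumption.

```python
def derated_voltage(rated_v, temp_c, knee_c=85.0, max_c=125.0,
                    fraction_at_max=0.67):
    """Estimate usable voltage at temp_c using an assumed linear derating curve.

    All curve parameters are hypothetical; take real values from the
    capacitor's datasheet.
    """
    if temp_c > max_c:
        raise ValueError("temperature exceeds the assumed maximum rating")
    if temp_c <= knee_c:
        return rated_v  # no derating at or below the knee temperature
    # Fraction of the way from the knee to the maximum temperature (0 to 1).
    progress = (temp_c - knee_c) / (max_c - knee_c)
    return rated_v * (1.0 - (1.0 - fraction_at_max) * progress)


if __name__ == "__main__":
    for temp in (25, 105, 125):
        print(temp, derated_voltage(50, temp))
```

With these placeholder values, a 50V part keeps its full rating at 25 °C, drops to about 41.75V at 105 °C, and to about 33.5V at 125 °C. Frequency-related heating would reduce the usable voltage further and is not modeled here.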

To better understand how surges affect performance, visit our resource on inrush surge current.

Safety Margins and Proper Derating

It’s standard practice to apply a safety factor, often by selecting components rated 1.5 to 2 times above the working voltage. This accounts for unpredictable spikes and long-term wear. Derating improves reliability, especially in renewable energy and automation systems.

If you're unsure how component values relate to total load, refer to the apparent power formula, or use a calculator for power factor to balance reactive and real components in your design.

Choosing Ceramic Components

Ceramic-based units are common due to their compact size and good frequency performance. However, their voltage tolerance varies with class and construction. For best results, select the dielectric class based on the application’s temperature range, applied voltage, and current type (AC or DC). Learn how these devices affect system efficiency in our article on capacitive loads.

Frequently Asked Questions

What does capacitor voltage rating mean and why is it important?

It defines the upper limit of voltage a part can safely handle. Exceeding this limit may lead to overheating or system failure.

How is the correct rating selected?

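Multiply the circuit’s maximum voltage by at least 1.5 to build in a margin of safety for spikes or fluctuations, then choose the next standard rating at or above the result. For example, a 12V circuit needs at least 18V, so a 25V part is the usual choice.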

What happens if the capacitor voltage rating is higher than needed?

Using a rating that is too high does not harm the system, but it may lead to unnecessary costs or oversized components.

Can a higher-rated part be used safely?

Yes. Higher ratings are often safer, as long as size and budget constraints are considered.

How does temperature impact voltage limits?

Rising temperatures reduce the voltage a capacitor can tolerate by weakening the insulation, which also lowers long-term reliability.

Selecting the right capacitor voltage rating is vital for ensuring long-term performance and circuit safety. By factoring in AC vs. DC stress, environmental conditions, and material differences, you can build more resilient systems and avoid costly failures.
