Electromagnetic Induction

By R.W. Hurst, Editor

Electromagnetic induction is the process of generating electric current by changing a magnetic field, forming the basis for transformers, generators, and electric motors in power generation and energy conversion systems.

What is Electromagnetic Induction?

Electromagnetic induction is a fascinating and vital concept shaping how we generate, transfer, and utilize electricity.

✅ Process where a changing magnetic field induces an electric current in a conductor

✅ Fundamental principle behind transformers, generators, and electric motors

✅ Essential for modern power generation, transmission, and energy conversion

Its underlying principles, Faraday's Law and Lenz's Law, explain what happens when magnetic fields interact with conductors. Its applications, including electric generators, transformers, induction heating, and wireless charging, have transformed daily life, and continued research into these principles promises further advances in electricity and magnetism. Understanding Faraday's Law is essential to grasping how changing magnetic fields generate electricity through electromagnetic induction.

Fundamental Laws of Electromagnetic Induction

Faraday's Law of electromagnetic induction, discovered by Michael Faraday in 1831, states that the EMF induced in a coil is proportional to the rate of change of the magnetic flux (dΦ/dt) through the coil and to the number of turns in the coil. The faster the magnetic flux changes, the larger the induced voltage. This relationship between electricity and magnetism forms the foundation of generators, transformers, and other key electrical systems.
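As a rough illustration, the sketch below applies Faraday's Law, written as emf = -N dΦ/dt, to a coil whose flux changes at a steady rate. The function name `average_emf` and the coil values are hypothetical, and treating the flux change as linear over the interval is an assumption, so the result is the average rather than the instantaneous EMF.

```python
def average_emf(turns: int, flux_start_wb: float, flux_end_wb: float, interval_s: float) -> float:
    """Average EMF in volts from Faraday's Law, emf = -N * dPhi/dt.

    Assumes the flux changes linearly over the interval, so dPhi/dt is the
    total flux change divided by the elapsed time.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    flux_rate = (flux_end_wb - flux_start_wb) / interval_s  # webers per second
    return -turns * flux_rate


# Hypothetical 200-turn coil: flux rises from 0 to 0.01 Wb.
slow = average_emf(200, 0.0, 0.01, 0.05)   # change over 50 ms
fast = average_emf(200, 0.0, 0.01, 0.025)  # same change over 25 ms
print(f"slow: {slow:.1f} V, fast: {fast:.1f} V")
```

Halving the time over which the same flux change occurs doubles the induced voltage, which is the "faster change, larger voltage" behavior described above.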

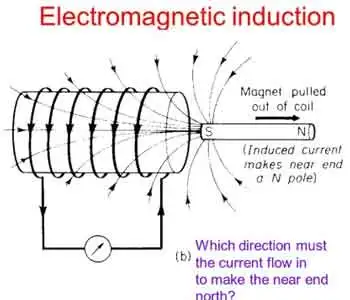

Lenz's Law, another crucial principle, gives the direction of the induced current: it always flows in a direction whose own magnetic field opposes the change in magnetic flux that produced it. This opposition is a consequence of energy conservation, and it is why the mathematical form of Faraday's Law carries a minus sign. Combining Faraday's Law and Lenz's Law explains both the size and the direction of the voltage induced in real-world devices. For a deeper understanding of the polarity of the voltage induced in coils, explore the principles of Lenz's Law.
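One way to see Lenz's Law at work is to compute the current that the induced EMF drives around a closed coil, I = -N (dΦ/dt) / R, and check its sign. The sketch below uses a hypothetical `induced_current` helper, a single-turn loop of 2 Ω, and an assumed sign convention in which positive current produces flux along the applied field.

```python
def induced_current(flux_rate_wb_per_s: float, resistance_ohm: float, turns: int = 1) -> float:
    """Current driven around a closed coil by the induced EMF: I = -N * (dPhi/dt) / R.

    Sign convention (assumed): positive current is the direction whose own
    magnetic field points along the applied flux.
    """
    return -turns * flux_rate_wb_per_s / resistance_ohm


for flux_rate in (0.5, -0.5):  # flux increasing, then decreasing (Wb/s)
    current = induced_current(flux_rate, resistance_ohm=2.0)
    # Opposite signs mean the induced current's field works against the change.
    opposes_change = current * flux_rate < 0
    print(f"dPhi/dt = {flux_rate:+.1f} Wb/s -> I = {current:+.2f} A, opposes change: {opposes_change}")
```

In both cases the current takes the sign opposite to the flux change: it fights a rising flux and props up a falling one.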

Core Applications: Generators and Transformers

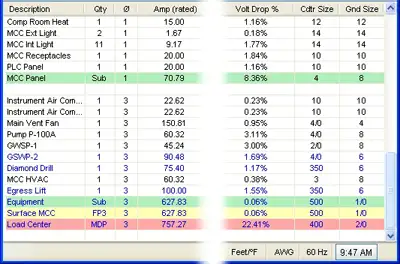

One notable application is the electrical generator, which converts mechanical energy into electrical energy. In a typical generator, a coil of wire rotates within a magnetic field, so the magnetic flux through the coil changes continuously. This change induces a voltage in the coil, which drives a current when the coil is connected to a load. Generators are used in power plants, automobiles, and other systems that require a continuous electrical supply. Transformers, including three-phase transformers, apply the same principle to transfer power efficiently between circuits at different voltages.
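For an idealized generator, a flat coil of N turns and area A rotating at angular speed ω in a uniform field B sees a flux Φ(t) = B A cos(ωt), so Faraday's Law gives e(t) = N B A ω sin(ωt). The sketch below evaluates that expression; the function names and the 100-turn, 0.5 T, 0.02 m², 60 Hz values are hypothetical, and real machines with distributed windings and non-uniform fields deviate from this simple form.

```python
import math


def generator_emf(turns: int, field_t: float, area_m2: float, freq_hz: float, t_s: float) -> float:
    """Instantaneous EMF of a flat coil rotating in a uniform magnetic field.

    Flux is Phi(t) = B*A*cos(omega*t), so emf(t) = -N dPhi/dt = N*B*A*omega*sin(omega*t).
    """
    omega = 2 * math.pi * freq_hz  # angular speed in rad/s
    return turns * field_t * area_m2 * omega * math.sin(omega * t_s)


def peak_emf(turns: int, field_t: float, area_m2: float, freq_hz: float) -> float:
    """Amplitude of the sinusoidal EMF: N * B * A * omega."""
    return turns * field_t * area_m2 * 2 * math.pi * freq_hz


peak = peak_emf(100, 0.5, 0.02, 60)
# Sample one cycle at quarter-period steps: the EMF peaks when the flux is changing fastest.
for step in range(5):
    t = step / (4 * 60)
    print(f"t = {t * 1000:5.2f} ms  emf = {generator_emf(100, 0.5, 0.02, 60, t):+8.2f} V")
print(f"peak: {peak:.1f} V")
```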

Another critical application is the transformer, which transfers electrical energy between circuits at different voltages. A transformer consists of two coils, the primary and secondary windings, wound on a shared magnetic core. When alternating current flows through the primary winding, it creates a changing magnetic flux in the core that induces a voltage in the secondary winding. The ratio of the numbers of turns in the two windings determines whether the transformer steps the voltage up or down, and by how much.
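Because both windings link the same changing core flux, each turn develops the same EMF, so an ideal transformer obeys Vs / Vp = Ns / Np, and with no losses Is = Ip × Np / Ns. The sketch below assumes an ideal (lossless, fully coupled) transformer; the function names and the 7200 V, 600:20-turn example are hypothetical.

```python
def ideal_secondary_voltage(primary_volts: float, primary_turns: int, secondary_turns: int) -> float:
    """Secondary voltage of an ideal transformer: Vs = Vp * Ns / Np."""
    return primary_volts * secondary_turns / primary_turns


def ideal_secondary_current(primary_amps: float, primary_turns: int, secondary_turns: int) -> float:
    """With no losses, input power equals output power, so Is = Ip * Np / Ns."""
    return primary_amps * primary_turns / secondary_turns


# Hypothetical step-down transformer: 7200 V primary, 600 primary turns, 20 secondary turns.
vs = ideal_secondary_voltage(7200, 600, 20)
is_ = ideal_secondary_current(5.0, 600, 20)
print(f"secondary: {vs:.0f} V at {is_:.0f} A (power in = {7200 * 5.0:.0f} W, out = {vs * is_:.0f} W)")
```

Swapping the turn counts turns the same device into a step-up transformer; the voltage and current ratios simply invert.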

Special Phenomena: Eddy Currents, Mutual Inductance, and Self-Inductance

Eddy currents are an intriguing aspect of electromagnetic induction. When the magnetic flux through a solid conductor changes, it induces swirling, closed loops of electric current within the conductor. Eddy currents generate heat and cause energy losses in electrical equipment, which is why transformer and motor cores are built from thin, insulated laminations, but they also have practical uses, such as in metal detectors and induction heating. Devices such as electric motors rely on the principles of inductance to transfer energy efficiently.
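To show why laminations reduce eddy-current losses, the sketch below uses the standard textbook approximation for a thin sheet of thickness d and resistivity ρ carrying sinusoidal flux of peak density Bp at frequency f: P = π² Bp² d² f² / (6ρ) watts per cubic meter. The function name and the silicon-steel values (1.5 T, 60 Hz, roughly 4.7 × 10⁻⁷ Ω·m) are illustrative assumptions; the formula ignores hysteresis and applies only when the sheet is thin compared with the skin depth.

```python
import math


def eddy_loss_per_volume(peak_flux_density_t: float, thickness_m: float,
                         freq_hz: float, resistivity_ohm_m: float) -> float:
    """Classical eddy-current loss in W/m^3 for a thin sheet under sinusoidal flux.

    P = pi^2 * Bp^2 * d^2 * f^2 / (6 * rho). Hysteresis and excess losses are ignored.
    """
    return (math.pi ** 2 * peak_flux_density_t ** 2 * thickness_m ** 2 * freq_hz ** 2) / (
        6 * resistivity_ohm_m
    )


# Illustrative values only: 1.5 T peak, 60 Hz, resistivity ~4.7e-7 ohm*m.
thick = eddy_loss_per_volume(1.5, 0.50e-3, 60, 4.7e-7)  # 0.50 mm laminations
thin = eddy_loss_per_volume(1.5, 0.25e-3, 60, 4.7e-7)   # 0.25 mm laminations
print(f"0.50 mm: {thick / 1e3:.2f} kW/m^3, 0.25 mm: {thin / 1e3:.2f} kW/m^3, ratio {thick / thin:.1f}")
```

Because the loss scales with d², halving the lamination thickness cuts the eddy-current loss to about a quarter.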

Two critical aspects of electromagnetic induction are mutual inductance and self-inductance. Mutual inductance occurs when the current in one coil induces a voltage in a nearby coil due to their shared magnetic field. Self-inductance refers to a coil's ability to induce a voltage within itself when the current flowing through it changes. Understanding these concepts is crucial for designing and analyzing complex electrical circuits and systems.
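Both effects follow the same pattern as Faraday's Law, with inductance standing in for the flux: a coil's self-induced EMF is -L dI/dt, and the EMF induced in a neighboring coil is -M dI₁/dt, where M is the mutual inductance. The sketch below is a minimal illustration; the function names and the 50 mH, 10 mH, and 2 A-in-10 ms values are hypothetical.

```python
def self_induced_emf(inductance_h: float, di_dt_a_per_s: float) -> float:
    """EMF a coil induces in itself when its own current changes: -L * dI/dt."""
    return -inductance_h * di_dt_a_per_s


def mutual_emf(mutual_inductance_h: float, di1_dt_a_per_s: float) -> float:
    """EMF induced in coil 2 by a changing current in nearby coil 1: -M * dI1/dt."""
    return -mutual_inductance_h * di1_dt_a_per_s


# Hypothetical: coil 1 current ramps from 0 A to 2 A in 10 ms.
di_dt = (2.0 - 0.0) / 0.010             # 200 A/s
v_self = self_induced_emf(0.05, di_dt)  # L = 50 mH (assumed)
v_mutual = mutual_emf(0.01, di_dt)      # M = 10 mH (assumed)
print(f"self-induced: {v_self:.1f} V, induced in coil 2: {v_mutual:.1f} V")
```

The minus signs again express Lenz's Law: the self-induced EMF opposes the rising current in coil 1, which is why inductors resist sudden changes in current.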

Demonstrating the Principle: Coil and Magnet Interaction

Electromagnetic induction is often demonstrated with a coil and a magnet, either a permanent magnet or an electromagnet. A bar magnet may be moved toward or away from a coil, or the coil may be moved relative to the magnet. The motion changes the magnetic flux through the coil and induces a voltage in it, demonstrating the dynamic relationship between electricity and magnetism; holding the magnet still produces no voltage. Learning the basics of electrical resistance helps explain how eddy currents generate heat in conductive materials.
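The sketch below simulates this demonstration numerically: it assumes a hypothetical bell-shaped flux profile as a magnet passes through a 100-turn coil at 1 m/s, then applies Faraday's Law using a finite-difference estimate of dΦ/dt. The profile shape, the function names, and every parameter value are illustrative assumptions, not measurements.

```python
import math


def flux_through_coil(t_s: float, peak_flux_wb: float = 2e-4,
                      speed_m_s: float = 1.0, width_m: float = 0.02) -> float:
    """Hypothetical bell-shaped flux profile as a bar magnet passes through a coil.

    The magnet is centred in the coil at t = 0. The profile shape and all
    default values are illustrative assumptions, not measured data.
    """
    position_m = speed_m_s * t_s
    return peak_flux_wb * math.exp(-(position_m / width_m) ** 2)


def coil_emf(t_s: float, turns: int = 100, dt: float = 1e-6) -> float:
    """Faraday's Law, emf = -N dPhi/dt, with dPhi/dt from a central difference."""
    dphi_dt = (flux_through_coil(t_s + dt) - flux_through_coil(t_s - dt)) / (2 * dt)
    return -turns * dphi_dt


for t_ms in (-30, -10, 10, 30):
    print(f"t = {t_ms:+d} ms  emf = {coil_emf(t_ms / 1000):+.3f} V")
```

The EMF has one sign while the magnet approaches (flux rising) and the opposite sign as it leaves (flux falling), and it vanishes at the instant the flux is at its maximum and momentarily not changing.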

Modern Impact and Emerging Technologies

Electromagnetic induction has an immense impact on modern life, from generating electricity in power plants to operating transformers, electric motors, and wireless chargers. Faraday's Law and Lenz's Law explain what happens when magnetic fields interact with conductors, and by harnessing these principles, scientists and engineers have created practical applications that have transformed daily life.

Electromagnetic induction demonstrates the fundamental relationship between electricity and magnetism and is integral to many aspects of the modern world. Faraday's Law and Lenz's Law underpin this phenomenon, governing the voltage and current induced when magnetic fields change. Its applications and related effects range from electric generators and transformers to eddy currents, mutual inductance, and self-inductance. By understanding these principles, we can continue to develop new technologies and advance our understanding of the world.

Induction Heating

Induction heating is another practical application of electromagnetic induction. It uses eddy currents to heat conductive materials, such as metals. When a conductive workpiece is placed in a rapidly changing magnetic field, eddy currents are induced within it, and the material's electrical resistance converts their energy into heat. This heating method is used in industrial processes such as metal hardening and welding, as well as in induction cooktops.
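A back-of-the-envelope estimate connects the eddy current to the temperature of the part: Joule heating delivers P = I²R, and if all of that energy stays in the workpiece, its temperature rises by ΔT = P t / (m c). The sketch below lumps the distributed eddy currents into a single hypothetical RMS current and effective resistance; the function name, the 500 A, 4 mΩ, 10 s, 0.5 kg values, and the approximate specific heat of steel (about 490 J/(kg·K)) are all assumptions, and heat lost to the surroundings is ignored.

```python
def temperature_rise(eddy_current_a: float, effective_resistance_ohm: float, seconds: float,
                     mass_kg: float, specific_heat_j_per_kg_k: float) -> float:
    """Temperature rise of a workpiece heated by eddy currents, ignoring heat losses.

    Joule heating P = I^2 * R, with I an RMS eddy current along an effective path
    of resistance R; the energy P * t raises the temperature by P * t / (m * c).
    """
    power_w = eddy_current_a ** 2 * effective_resistance_ohm
    return power_w * seconds / (mass_kg * specific_heat_j_per_kg_k)


# Hypothetical: 500 A RMS, 4 milliohm path, 10 s, 0.5 kg of steel (c ~ 490 J/(kg K)).
rise = temperature_rise(500, 0.004, 10, 0.5, 490)
print(f"temperature rise: {rise:.1f} K")
```

Since heating scales with the square of the current, doubling the eddy current (for example, with a stronger or faster-changing field) quadruples the heating rate.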

Wireless Charging

Wireless charging is an emerging technology that uses electromagnetic induction to transfer energy between two coils. When an alternating current flows through the primary (transmitter) coil, it produces a changing magnetic field that induces a voltage in the secondary (receiver) coil, which powers or charges the device. The technology has been increasingly adopted in recent years, particularly for smartphones and electric vehicles, reducing dependence on cords and cables.
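The transmitter and receiver behave like the mutually coupled coils described earlier. If the primary current is sinusoidal, i₁(t) = I₁ sin(2πft), the receiver's open-circuit EMF is -M di₁/dt, with amplitude 2πfMI₁. The sketch below assumes hypothetical values (M = 5 µH, 1 A peak, 150 kHz) and a hypothetical function name; it ignores the load and any resonant tuning a real charger uses.

```python
import math


def receiver_peak_voltage(mutual_inductance_h: float, primary_peak_a: float, freq_hz: float) -> float:
    """Peak open-circuit voltage induced in the receiver coil.

    For a primary current i1(t) = I1 * sin(2*pi*f*t), the receiver EMF is
    -M * di1/dt, whose amplitude is 2*pi*f*M*I1.
    """
    return 2 * math.pi * freq_hz * mutual_inductance_h * primary_peak_a


aligned = receiver_peak_voltage(5e-6, 1.0, 150e3)       # hypothetical well-aligned coils
misaligned = receiver_peak_voltage(2.5e-6, 1.0, 150e3)  # hypothetical: offset halves M
print(f"aligned: {aligned:.2f} V, misaligned: {misaligned:.2f} V")
```

Because the induced voltage is proportional to M, anything that weakens the coupling, such as a larger gap or a sideways offset between the coils, reduces the voltage available to the device.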
