How Is Electricity Generated?

Electricity is produced by converting energy sources such as fossil fuels, nuclear, solar, wind, or hydro into electrical energy, in most cases using turbines and generators. These systems harness mechanical, thermal, or chemical energy and transform it into usable power.

✅ Converts energy sources like coal, gas, wind, or sunlight into power

✅ Uses generators driven by turbines to create electrical current

✅ Supports global power grids and industrial, commercial, and residential use

Understanding Electricity Generation

Electricity generation is the lifeblood of modern civilization, powering homes, industries, hospitals, transportation systems, and digital infrastructure. But behind the flip of a switch lies a vast and complex process that transforms raw energy into electrical power. At its core, electricity is generated by converting various forms of energy—mechanical, thermal, chemical, or radiant—into a flow of electric charge through systems engineered for efficiency and reliability.

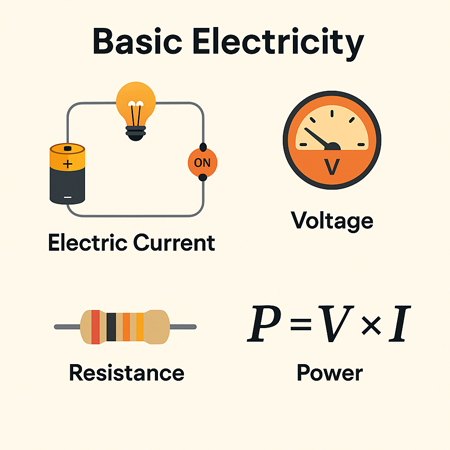

Understanding the role of voltage is essential in this process, as it determines the electrical pressure that drives current through circuits.

According to the U.S. Energy Information Administration, the United States relies on a diverse mix of technologies to produce electric power, including fossil fuels, nuclear power, and renewables. In recent years, the rapid growth of solar photovoltaic systems and the widespread deployment of wind turbines have significantly increased the share of clean energy in the national grid. The two work differently: wind turbines convert moving air into mechanical rotation that drives a generator, while photovoltaic panels convert sunlight directly into electricity without a turbine. This transition reflects broader efforts to reduce emissions while meeting rising electric power demand.

How Power Generation Works

Most electricity around the world is produced using turbines and generators. These devices are typically housed in large-scale power plants. The process begins with an energy source, such as fossil fuels, nuclear reactions, or renewable inputs like water, wind, or concentrated sunlight, which is used to create movement. This movement, in turn, drives a turbine, which spins a shaft connected to a generator. Inside the generator, a rotating magnetic field sweeps past conductive coils, inducing a voltage and producing alternating current (AC) electricity. This method, known as electromagnetic induction, is the fundamental mechanism by which nearly all electric power is made; photovoltaic cells, which convert sunlight directly, are the main exception.
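To make the induction step concrete, here is a minimal Python sketch of the voltage induced in a single coil turning in a uniform magnetic field, using the textbook relation e(t) = N · B · A · ω · sin(ωt). The function names and the example numbers are illustrative assumptions, and a real generator has many windings, multiple poles, and losses that this idealized model ignores.

```python
import math


def angular_speed_rad_s(rpm: float) -> float:
    """Convert shaft speed from revolutions per minute to radians per second."""
    return 2.0 * math.pi * rpm / 60.0


def peak_emf(turns: int, flux_density_t: float, coil_area_m2: float, rpm: float) -> float:
    """Peak induced voltage (V) for an idealized coil: N * B * A * omega."""
    return turns * flux_density_t * coil_area_m2 * angular_speed_rad_s(rpm)


def emf_at(turns: int, flux_density_t: float, coil_area_m2: float,
           rpm: float, t_s: float) -> float:
    """Instantaneous induced voltage (V) at time t_s, a sine wave in time."""
    omega = angular_speed_rad_s(rpm)
    return peak_emf(turns, flux_density_t, coil_area_m2, rpm) * math.sin(omega * t_s)


if __name__ == "__main__":
    # Hypothetical coil: 100 turns, 0.5 T field, 0.1 m^2 area, 3600 rpm shaft.
    print(f"Peak EMF: {peak_emf(100, 0.5, 0.1, 3600):.1f} V")
```

Because the voltage follows a sine wave as the shaft turns, the output is naturally alternating current, which matches the description above.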

In designing and maintaining electrical systems, engineers must also consider voltage drop, which can reduce efficiency and power quality. You can evaluate system losses using our interactive voltage drop calculator, and better understand the math behind it using the voltage drop formula.
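As a rough illustration of the idea, the sketch below estimates voltage drop in a two-wire circuit from Ohm's law, with conductor resistance computed as resistivity × length / area. It is a simplified, resistance-only approximation and is not necessarily the formula used by the calculator mentioned above; the copper resistivity value is an approximate room-temperature figure, and the function names are hypothetical.

```python
# Approximate resistivity of copper near 20 °C, in ohm-metres (assumed value).
COPPER_RESISTIVITY_OHM_M = 1.68e-8


def voltage_drop_v(current_a: float, one_way_length_m: float, area_mm2: float,
                   resistivity_ohm_m: float = COPPER_RESISTIVITY_OHM_M) -> float:
    """Resistive voltage drop (V) over the out-and-back path of a two-wire circuit."""
    area_m2 = area_mm2 * 1e-6                   # mm^2 -> m^2
    round_trip_m = 2.0 * one_way_length_m       # current flows out and back
    resistance_ohm = resistivity_ohm_m * round_trip_m / area_m2
    return current_a * resistance_ohm           # Ohm's law: V = I * R


def percent_drop(drop_v: float, source_v: float) -> float:
    """Voltage drop expressed as a percentage of the source voltage."""
    return 100.0 * drop_v / source_v


if __name__ == "__main__":
    # Hypothetical run: 20 A over 30 m of 2.5 mm^2 copper on a 230 V supply.
    drop = voltage_drop_v(20, 30, 2.5)
    print(f"Drop: {drop:.2f} V ({percent_drop(drop, 230):.1f}% of supply)")
```

The model ignores reactance and temperature effects, so it is best read as a first estimate for short, low-voltage runs.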

Energy Sources Used in Power Production

Steam turbines remain the dominant technology in global energy production. These are especially common in plants that burn coal, natural gas, or biomass, or that rely on nuclear fission. In a typical thermal power plant, water is heated to create high-pressure steam, which spins the turbine blades. In nuclear facilities, this steam is generated by the immense heat released when uranium atoms are split. Although these systems are mature and deliver large amounts of steady power, much of the heat they produce is lost rather than converted into electricity, and they face environmental and safety concerns: greenhouse gas emissions from fossil fuels, and radioactive waste and accident risk from nuclear power.

Power quality in these plants can be impacted by voltage sag, which occurs when systems experience a temporary drop in electrical pressure, often due to sudden large loads or faults. Managing such variations is crucial to stable output.

The Rise of Renewable Energy in Electricity Generation

Alongside these large-scale thermal technologies, renewable sources have grown significantly. Hydroelectric power harnesses the energy of falling or flowing water, typically from a dam, to spin turbines. Wind turbines, most commonly horizontal-axis designs, capture the movement of air with large rotor blades that turn a generator. Solar power generates electricity in two distinct ways: photovoltaic cells convert sunlight directly into electric power using semiconductors, while solar thermal plants concentrate sunlight to heat fluids and produce steam. Geothermal systems tap into the Earth’s internal heat to generate steam directly or via heat exchangers.
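The power available from water and wind can be estimated with two standard textbook relations: P = η · ρ · g · Q · H for hydro, and P = ½ · ρ · A · v³ · Cp for wind. The Python sketch below applies them; the default efficiency and power coefficient are illustrative assumptions rather than figures from any specific plant, and the function names are hypothetical.

```python
import math

WATER_DENSITY_KG_M3 = 1000.0   # fresh water, approximate
AIR_DENSITY_KG_M3 = 1.225      # air at sea level, about 15 °C (approximate)
GRAVITY_M_S2 = 9.81
BETZ_LIMIT = 16.0 / 27.0       # theoretical maximum power coefficient for a rotor


def hydro_power_w(flow_m3_s: float, head_m: float, efficiency: float = 0.9) -> float:
    """Electrical power (W) from water flow Q (m^3/s) falling through head H (m)."""
    return efficiency * WATER_DENSITY_KG_M3 * GRAVITY_M_S2 * flow_m3_s * head_m


def wind_power_w(rotor_diameter_m: float, wind_speed_m_s: float,
                 power_coefficient: float = 0.4) -> float:
    """Power (W) captured by a rotor; output scales with the cube of wind speed."""
    if not 0.0 <= power_coefficient <= BETZ_LIMIT:
        raise ValueError("power_coefficient must lie between 0 and the Betz limit")
    swept_area_m2 = math.pi * (rotor_diameter_m / 2.0) ** 2
    return 0.5 * AIR_DENSITY_KG_M3 * swept_area_m2 * wind_speed_m_s ** 3 * power_coefficient


if __name__ == "__main__":
    # Hypothetical sites: 10 m^3/s over a 50 m head; a 100 m rotor in 10 m/s wind.
    print(f"Hydro: {hydro_power_w(10, 50) / 1e6:.2f} MW")
    print(f"Wind:  {wind_power_w(100, 10) / 1e6:.2f} MW")
```

The cubic dependence on wind speed is worth noting: halving the wind speed cuts the available power to one eighth, which is one reason wind output varies so much.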

These renewable systems offer major advantages in terms of sustainability and environmental impact. They produce few or no direct emissions during operation and rely on natural, often abundant energy flows. However, they also face limitations. Solar and wind power are intermittent, meaning their output fluctuates with weather and time of day. Hydropower and geothermal are geographically constrained and viable only in certain regions. Despite these challenges, renewables now account for a growing share of global electricity generation and play a central role in efforts to decarbonize the energy sector.

In facilities where water and electricity coexist, such as hydroelectric plants, understanding the hazards of combining the two is critical to operating safely and preventing electrocution.

Generators and Turbines: The Heart of Electricity Generation

Generators themselves are marvels of electromechanical engineering. They convert rotational kinetic energy into electrical energy through a system of magnets and copper windings. Their efficiency, durability, and capacity to synchronize with the grid are critical to a stable electric power supply. In large plants, multiple generators operate in parallel, contributing to a vast, interconnected grid that balances supply and demand in real-time.
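One concrete piece of grid synchronization is shaft speed. A synchronous generator must turn at a speed fixed by the grid frequency and its number of magnetic poles, N = 120 · f / P. The short Python sketch below computes that speed; the function name and example machines are illustrative assumptions.

```python
def synchronous_speed_rpm(grid_frequency_hz: float, poles: int) -> float:
    """Shaft speed (rpm) at which a synchronous generator matches grid frequency."""
    if poles < 2 or poles % 2 != 0:
        raise ValueError("poles must be a positive even number")
    return 120.0 * grid_frequency_hz / poles


if __name__ == "__main__":
    # Hypothetical machines: a 2-pole steam unit and a 40-pole hydro unit at 60 Hz.
    print(synchronous_speed_rpm(60, 2))    # 3600.0
    print(synchronous_speed_rpm(60, 40))   # 180.0
```

This is why fast steam turbines are typically paired with generators that have few poles, while slow-turning hydro turbines use generators with many poles.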

Turbines, powered by steam, water, gas, or wind, generate the rotational force needed to drive the generator. Their design and performance have a significant impact on the overall efficiency and output of the plant. Measuring output accurately requires instruments such as watthour meters, which record energy delivered over time, and wattmeters, which measure power at a given moment. Both are standard tools in generation stations.

Technicians often use formulas such as Watt’s Law, which states that power equals voltage multiplied by current, to determine power consumption and verify performance. Ammeters, which measure current, are likewise used to monitor the current flowing through generator systems.
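As a small worked example, Watt's Law (P = V × I) and the energy a watthour meter would record (E = P × t) can be sketched in Python as follows. The optional power factor is an added assumption for AC circuits, where real power is V × I × power factor, and the function names and readings are hypothetical.

```python
def power_w(voltage_v: float, current_a: float, power_factor: float = 1.0) -> float:
    """Real power (W): voltage times current, scaled by power factor for AC loads."""
    return voltage_v * current_a * power_factor


def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy (kWh) delivered at a constant power over the given number of hours."""
    return power_watts * hours / 1000.0


if __name__ == "__main__":
    # Hypothetical reading: 240 V and 10 A from a voltmeter and an ammeter.
    p = power_w(240, 10)
    print(f"Power: {p:.0f} W, energy over 5 h: {energy_kwh(p, 5):.1f} kWh")
```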
