Carbon capture Canada's best hope to meet Kyoto targets

By Vancouver Sun

A just-released federal-Alberta committee report says urgent action is required to ensure that Canada starts mopping up after itself on the carbon emissions front.

It notes that carbon capture, or sequestration, could become Canada's ticket to meeting its greenhouse gas reduction targets under the Kyoto Protocol. Specifically, by 2050, carbon capture could address about 40 per cent of Canada's projected emissions.

Realistically, this may be our best hope of meeting targets that call for emissions reductions of between 60 and 70 per cent by mid-century. By 2020, Canada has promised to cut emissions by 20 per cent from current levels.

Put those reduction promises against the fact that our emissions are up by more than 25 per cent since 1990, and the challenge facing Canadian politicians is apparent.

There's no getting away from the fact that this is a country rich in oil, natural gas and coal resources, all of which spew the stuff that's creating climate havoc around the world.

The oilsands north of Edmonton in particular are problematic in that they've turned Alberta into the pollution champion of Canada. That province alone accounts for a third of the country's greenhouse gas emissions.

The report, nearly a year in the making, asserts that developing carbon capture and storage technology is as vital a national infrastructure project for Canada as the national railway was, and as worthy of taxpayer funding as the Hibernia oil field off Newfoundland, electricity transmission grids, and natural gas and oil pipelines.

It proposes three to five carbon-capture projects by 2015, at a projected cost of $2 billion.

Carbon capture, endorsed by the Intergovernmental Panel on Climate Change, is already in use on a limited basis around the globe.

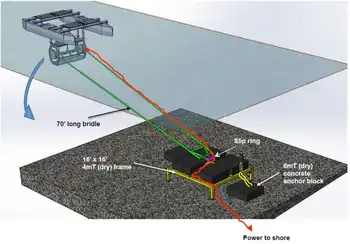

It works by trapping carbon dioxide at the source of pollution, compressing it, and shipping it by pipeline to disposal sites where it is injected into underground rock formations.

The 55-page report, titled Canada's Fossil Energy Future, asserts that carbon capture is "a natural fit for Canada," a country endowed with stable underground sedimentary rock formations ideal for storing carbon dioxide.

It's "an opportunity for the country and its industrial sectors to become world leaders." Sounds good. But of course there's what the report terms "a financial gap."

It goes on to argue that, as a national building initiative, carbon capture technology is the responsibility of all Canadians.

That viewpoint is not surprising since the committee itself was jointly sponsored by the federal and Alberta governments.

But an excellent case can be made that Alberta should pick up the lion's share of the tab to create this tidbit of technology.

After all, Wild Rose Country is in danger of falling out of sync economically with other provinces, developing a fat-cat reputation as it continues to be the prime beneficiary of Canada's oil industry as well as the largest contributor among provinces to the greenhouse gas emissions problem.

Alberta is the only jurisdiction in Canada to be debt free and running huge surpluses.

Other provinces, appropriately, don't expect Alberta to pass along any of its oil wealth beyond contributing its share of equalization money.

Carbon capture presents an opportunity for Alberta to create some goodwill for itself across the country by providing funding for an innovation that's badly needed in its own backyard.

Edmonton has been lagging on the goodwill front. Last week, Premier Ed Stelmach up and left a meeting of provincial premiers aimed at discussing greenhouse gas emissions.

At the meeting, four of his fellow premiers pledged to develop a cap-and-trade carbon market scheme to help Canada meet its greenhouse gas reduction targets under Kyoto.

Interestingly, environmental groups are giving a thumbs-down to national funding for carbon capture. They fear the new technology would provide an outlet that would do nothing to encourage energy conservation.

But there's another issue. As John Bennett, director of climateforchange.ca, has stated, the highly profitable industries doing the polluting should take their share of the responsibility.

"The concept of polluter pay is apparently too complicated for the oil industry."