Niagara Tunnel nearly complete

By Toronto Star

Energy minister Brad Duguid calls it a project that will deliver cleaner, greener power to Ontario families.

Critics call it a $985 million project that’s now coming in at $1.6 billion.

Ontario Power Generation boss Tom Mitchell calls it a “top of the scale” engineering project.

But no one who has seen it calls it a simple hole in the ground.

It’s a 10.2-kilometre tunnel bored through the Niagara escarpment, carrying water from above Niagara Falls to the Sir Adam Beck generating station in Queenston at a rate of 500 cubic metres a second.

And now, the main tunnel is nearly done.

Big Becky, the 4,000-tonne drilling behemoth that has chewed her way through solid rock since 2006, is 9.5 kilometres from the starting point, less than a kilometre from the finish.

She should break through in April, just above the Falls.

Marko Sobota sat happily at the controls, grinding forward at up to 1.8 metres an hour, driving the 14-metre-diameter tunnel ever closer to the goal.

“I’m just a local guy,” says Sobota. He was part of the crew that helped assemble Big Becky in 2005, and later learned to operate the machine.

“It’s great to get a job 10 minutes from home. And to be on a project this huge. It’s unbelievable.”

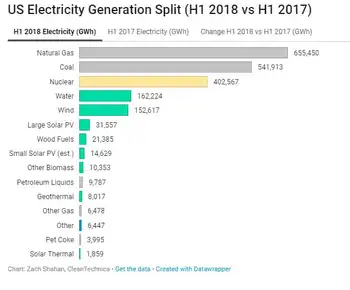

The additional water flowing through the Beck generating station will boost its annual output by 1.6 billion kilowatt hours, up from the current 12 billion.

But it’s a huge job. Boring the tunnel means moving 1.7 million cubic metres of solid rock.

It dips as low as 150 metres below ground, diving under a buried gorge that is invisible from the surface and, because it is not made of solid rock, unsuitable for tunneling.

“If anyone tells you doing green energy is easy, bring him down here,” says Mitchell, standing in the cavernous space as Big Becky throbs, rumbles and vibrates with a deafening cacophony.

The project found that out the hard way.

Getting around the underground obstacle forced the project to re-route the tunnel, driving the cost higher than expected.

Mitchell says it was the right decision in the circumstances. And when power starts to flow as a result of the project, probably in 2013, he’ll have to persuade the Ontario Energy Board that the added cost is justified, in order to build the cost into the rates that Ontario Power charges for electricity.

But that’s still a couple of years off because there’s plenty of work to be done on the tunnel, even when Becky finishes her work this spring.

The whole tunnel must be lined with smooth concrete: In effect, Mitchell says it amounts to drilling a hole and then building a pipe inside it.

The more perfectly round the pipe, and the smoother its walls, the more energy is transmitted to the turbines in the generating station.

But in the run-up to this fall’s provincial election, with the Conservatives sniping at the Liberals over high energy bills, engineering and technology sometimes take a back seat to politics.

Touring the tunnel with reporters, Duguid takes time out to slam Conservative leader Tim Hudak and New Democratic Party leader Andrea Horwath for showing insufficient enthusiasm for the Liberals’ green, and often pricey, energy projects.

Hudak, says Duguid, is “trying to hoodwink Ontarians to think we can build that cleaner, modern energy system for free.”

In October, voters get to decide whether he’s right.