A 100 percent renewable electricity target for US Climate Alliance states by 2035 would accelerate clean energy, electrification, and decarbonization, replacing coal and gas with wind, solar, and storage to cut air pollution, lower energy bills, create jobs, and advance environmental justice.

Key Points

A state-level target for alliance members to meet all electricity demand with renewable energy by 2035.

✅ 100% RES can meet rising demand from electrification

✅ Major health gains from reduced SO2, NOx, and particulates

✅ Jobs grow, energy burdens fall, climate resilience improves

The Union of Concerned Scientists joined with COPAL (Minnesota), GreenRoots (Massachusetts), and the Michigan Environmental Justice Coalition to better understand the feasibility and implications of leadership states meeting 100 percent of their electricity needs with renewable energy by 2035, a target reflected in federal clean electricity goals under discussion today.

We focused on 24 member states of the United States Climate Alliance, a bipartisan coalition of governors committed to the goals of the 2015 Paris Climate Agreement. We analyzed two main scenarios: business as usual versus 100 percent renewable electricity standards, in line with many state clean energy targets now in place.

Our analysis shows that:

Climate Alliance states can meet 100 percent of their electricity consumption with renewable energy by 2035, as independent assessments of zero-emissions feasibility suggest. This holds true even with strong increases in demand due to the electrification of transportation and heating.

A transition to renewables yields strong benefits in terms of health, climate, economies, and energy affordability.

To ensure an equitable transition, states should broaden access to clean energy technologies and decision making to include environmental justice and fossil fuel-dependent communities, while directly phasing out coal and gas plants.

Demands for climate action surround us. Every day brings news of devastating "this is not normal" extreme weather: record-breaking heat waves, precipitation, flooding, wildfires. Building resilience and mitigating the worst impacts of the climate crisis require immediate action to reduce heat-trapping emissions and transition to renewable energy, including practical decarbonization strategies adopted by states.

On the Road to 100 Percent Renewables explores actions at one critical level: how leadership states can address climate change by reducing heat-trapping emissions in key sectors of the economy as well as by considering the impacts of our energy choices. A collaboration of the Union of Concerned Scientists and local environmental justice groups COPAL (Minnesota), GreenRoots (Massachusetts), and the Michigan Environmental Justice Coalition, with contributions from the national Initiative for Energy Justice, assessed the potential to accelerate the use of renewable energy dramatically through state-level renewable electricity standards (RESs), major drivers of clean energy in recent decades. In addition, the partners worked with Greenlink Analytics, an energy research organization, to assess how RESs most directly affect people's lives, such as changes in public health, jobs, and energy bills for households.

Focusing on 24 members of the United States Climate Alliance (USCA), the study assesses the implications of meeting 100 percent of electricity consumption in these states with renewable energy in the near term; existing state commitments, such as Rhode Island's 100 percent by 2030 plan, offer examples that inform policy design. The alliance is a bipartisan coalition of governors committed to reducing heat-trapping emissions consistent with the goals of the 2015 Paris climate agreement.[1]

On the Road to 100 Percent Renewables looks at three types of results from a transition to 100 percent RES policies: improvements in public health from decreasing the use of coal and gas[2] power plants; net job creation from switching to more labor-oriented clean energy; and reduced household energy bills from using cleaner sources of energy. The study assumes a strong push to electrify transportation and heating to address harmful emissions from the current use of fossil fuels in these sectors. Our core policy scenario does not focus on electricity generation itself, nor does it mandate retiring coal, gas, and nuclear power plants or assess new policies to drive renewable energy in non-USCA states.

Our analysis shows that:

USCA states can meet 100 percent of their electricity consumption with renewable energy by 2035 even with strong increases in demand due to electrifying transportation and heating.

A transition to renewables yields strong benefits in terms of health, climate, economies, and energy affordability.

Renewable electricity standards must be paired with policies that address not only electricity consumption but also electricity generation, including modern grid infrastructure upgrades that enable higher renewable shares, both to transition away from fossil fuels more quickly and to ensure an equitable transition in which all communities experience the benefits of a clean energy economy.

Currently, the states in this analysis meet their electricity needs with differing mixes of electricity sources: fossil fuels, nuclear, and renewables. Yet across the states, the study shows significant declines in fossil fuel use from transitioning to clean electricity, while the use of solar and wind power, the dominant renewables, grows substantially:

In the study's "No New Policy" (business-as-usual) scenario, coal and gas generation stay largely at current levels over the next two decades. Electricity generation from wind and solar grows due to both current policies and low costs.

In a "100% RES" scenario, each USCA state puts in place a 100 percent renewable electricity standard. Gas generation falls, although some continues for export to non-USCA states. Coal generation essentially disappears by 2040. Wind and solar generation combined grow to seven times current levels, and three times as much as in the No New Policy scenario.
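The two multiples in the 100% RES scenario also imply how much wind and solar grow without new policy: if 100% RES reaches seven times current output and that is three times the No New Policy level, No New Policy reaches about 7/3, or roughly 2.3 times, current output. A minimal sketch of that arithmetic in Python, assuming the stated multiples are exact (they are presumably rounded, so the result is approximate); the function name is hypothetical:

```python
# Combined wind + solar generation, expressed as multiples of current output,
# using the ratios stated for the 100% RES scenario (assumed exact here).
RES_100_MULTIPLE = 7.0        # 100% RES: seven times current levels
RES_VS_NO_NEW_POLICY = 3.0    # 100% RES: three times the No New Policy level


def implied_no_new_policy_multiple(res_multiple: float, ratio: float) -> float:
    """Return No New Policy wind + solar output as a multiple of current output.

    If 100% RES reaches `res_multiple` times current output, and that is
    `ratio` times the No New Policy level, then No New Policy reaches
    res_multiple / ratio times current output.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return res_multiple / ratio


nnp = implied_no_new_policy_multiple(RES_100_MULTIPLE, RES_VS_NO_NEW_POLICY)
print(f"No New Policy: about {nnp:.1f}x current wind + solar output")
```

Because both inputs are rounded figures, the implied No New Policy multiple should be read as an order-of-magnitude check rather than a modeled result.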

A focus on meeting in-state electricity consumption in the 100% RES scenario yields important outcomes. Reductions in electricity from coal and gas plants in the USCA states reduce power plant pollution, including emissions of sulfur dioxide and nitrogen oxides. By 2040, this leads to 6,000 to 13,000 fewer premature deaths than in the No New Policy scenario, as well as 140,000 fewer cases of asthma exacerbation and 700,000 fewer lost workdays. The value of the additional public health benefits in the USCA states totals almost $280 billion over the two decades. In a more detailed analysis of three USCA states (Massachusetts, Michigan, and Minnesota), the 100% RES scenario leads to almost 200,000 more jobs in building and installing new electric generation capacity than the No New Policy scenario.

The 100% RES scenario also reduces average energy burdens, the portion of household income spent on energy. Even considering household costs solely for electricity and gas, energy burdens in the 100% RES scenario are at or below those in the No New Policy scenario in each USCA state in most or all years. The average energy burden across those states declines from 3.7 percent of income in 2020 to 3.0 percent in 2040 in the 100% RES scenario, compared with 3.3 percent in 2040 in the No New Policy scenario.
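Energy burden is a simple ratio: household energy spending divided by household income. To make the reported percentages concrete, here is a minimal Python sketch that converts them into annual dollar amounts for one hypothetical household. The $60,000 income is an illustrative assumption, held constant across years; the report's figures are averages across USCA states, not values for any single household.

```python
def energy_burden(annual_energy_cost: float, annual_income: float) -> float:
    """Energy burden: share of household income spent on energy, in percent."""
    if annual_income <= 0:
        raise ValueError("income must be positive")
    return 100.0 * annual_energy_cost / annual_income


# Hypothetical household income (assumption), held constant for illustration.
INCOME = 60_000.0

# Average energy burdens reported in the text, in percent of income.
burdens = {"2020": 3.7, "2040, No New Policy": 3.3, "2040, 100% RES": 3.0}

for label, pct in burdens.items():
    cost = INCOME * pct / 100.0
    # Round-trip check: the dollar amount maps back to the same burden.
    assert abs(energy_burden(cost, INCOME) - pct) < 1e-9
    print(f"{label}: {pct:.1f}% of income -> ${cost:,.0f} per year")

# Annual difference in 2040 between the two scenarios for this household.
savings = INCOME * (3.3 - 3.0) / 100.0
print(f"2040 difference: ${savings:,.0f} per year")
```

For this hypothetical household, the 0.3-percentage-point gap in 2040 works out to roughly $180 a year; actual savings would scale with each household's income and energy use.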

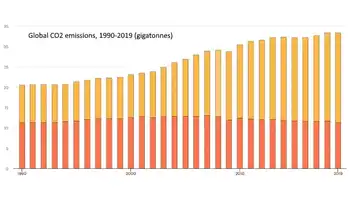

Decreasing the use of fossil fuels through increasing the use of renewables and accelerating electrification reduces emissions of carbon dioxide (CO2), with implications for climate, public health, and economies. Annual CO2 emissions from power plants in USCA states decrease 58 percent from 2020 to 2040 in the 100% RES scenario compared with 12 percent in the No New Policy scenario.
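The two percentage reductions are measured from the same 2020 baseline, so they can also be compared directly in 2040. A minimal Python sketch, assuming 2020 power-plant CO2 emissions in USCA states are indexed to 100 (the text reports only percentages, not tonnages): the 100% RES scenario ends at an index of 42 and No New Policy at 88, which puts 2040 emissions under 100% RES roughly half those under No New Policy.

```python
# Index 2020 power-plant CO2 emissions in USCA states to 100 (assumption:
# only the percentage reductions are given) and apply the 2020-2040 cuts.
BASELINE_2020 = 100.0
REDUCTION = {"100% RES": 0.58, "No New Policy": 0.12}

emissions_2040 = {
    name: BASELINE_2020 * (1.0 - cut) for name, cut in REDUCTION.items()
}

for name, value in emissions_2040.items():
    print(f"{name}: 2040 emissions index = {value:.0f}")

# How much lower 2040 emissions are under 100% RES than under No New Policy.
relative_gap = 1.0 - emissions_2040["100% RES"] / emissions_2040["No New Policy"]
print(f"100% RES is about {relative_gap:.0%} below No New Policy in 2040")
```

Comparing the two scenarios in 2040, rather than subtracting 12 from 58, gives the relative difference a reader would see in that year's emissions.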

The study also reveals gaps to be filled beyond eliminating fossil fuel pollution from communities, such as the persistence of gas generation to sell power to neighboring states, reflecting barriers to a fully renewable grid that policy must address. Further, it stresses the importance of policies targeting just and equitable outcomes in the move to renewable energy.

Moving away from fossil fuels in communities most affected by harmful air pollution should be a top priority in comprehensive energy policies. Many communities continue to bear far too large a share of the negative impacts from decades of siting the infrastructure for the nation's fossil fuel power sector in or near marginalized neighborhoods. This pattern will likely persist if the issue is not acknowledged and addressed. State policies should mandate a priority on reducing emissions in communities overburdened by pollution and avoiding investments inconsistent with the need to remove heat-trapping emissions and air pollution at an accelerated rate. And communities must be centrally involved in decisionmaking around any policies and rules that affect them directly, including proposals to change electricity generation, both to retire fossil fuel plants and to build the renewable energy infrastructure.

Key recommendations in On the Road to 100 Percent Renewables address moving away from fossil fuels, increasing investment in renewable energy, and reducing CO2 emissions. They aim to ensure that communities most affected by a history of environmental racism and pollution share in the benefits of the transition: cleaner air, equitable access to good-paying jobs and entrepreneurship alternatives, affordable energy, and the resilience that renewable energy, electrification, energy efficiency, and energy storage can provide. While many communities can benefit from the transition, strong justice and equity policies will avoid perpetuating inequities in the electricity system. State support to historically underserved communities for investing in solar, energy efficiency, energy storage, and electrification will encourage local investment, community wealth-building, and the resilience benefits the transition to renewable energy can provide.

A national clean electricity standard and strong pollution standards should complement state action to drive swift decarbonization and pollution reduction across the United States. Even so, states are well positioned to simultaneously address climate change and decades of inequities in the power system. While it does not substitute for much-needed national and international leadership, strong state action is crucial to achieving an equitable clean energy future.
