Latest T&D Test Equipment Articles

Hipot Testing Explained

Hipot testing checks electrical insulation strength by applying high voltage to confirm there are no current leaks. It is essential for ensuring safety, detecting faults, and preventing electrical failures in power equipment, cables, transformers, and other high-voltage systems.

What is Hipot Testing?

Hipot testing is a critical procedure for evaluating the dielectric strength and insulation integrity of electrical systems.

✅ Detects insulation breakdowns and leakage currents.

✅ Ensures equipment safety and compliance with standards.

✅ Prevents electrical hazards in high-voltage systems.

Also known as dielectric withstand testing, hipot testing applies high voltage to confirm that insulation can endure operating and surge conditions without breaking down. This test is crucial across various industries, including medical devices, automotive electronics, and aerospace systems, where insulation failure can pose life-threatening hazards. Companies that fail to conduct a proper dielectric strength test risk product recalls, lawsuits, and reputational damage, while effective testing supports reliability and safety.

A comprehensive dielectric strength evaluation is essential to ensure electrical insulation can withstand high voltages without failure. During hipot evaluations, engineers closely monitor the leakage current limit to detect any early signs of insulation breakdown. Complementary procedures like the insulation resistance inspection verify the long-term reliability of insulating materials, while partial discharge measurement helps identify microscopic defects that may develop into serious faults under stress. Additionally, performing a ground bond measurement ensures that all conductive paths can safely carry fault currents, completing a robust safety and quality assurance process.

Hipot Testing and Dielectric Withstand Test

Hipot testing, also known as a dielectric withstand test, is a method used to verify the electrical insulation strength of a device or component. The term “dielectric” refers to an insulating material, one that resists the flow of electric current, while “withstand” indicates the material’s capacity to endure high voltage without breaking down. During this test, a voltage much higher than the device’s normal operating level is applied across the insulation, typically between the current-carrying conductors and the grounded chassis or other exposed conductive parts. The goal is to ensure that the insulation can safely block current flow, preventing electrical shock, equipment damage, or catastrophic failures.

In practice, the dielectric withstand test checks for:

- Leakage current: Measuring whether any unwanted current flows through the insulation when it is exposed to high voltage.
- Breakdown point: Identifying the voltage level at which insulation fails or starts conducting.
- Safety margins: Confirming the insulation can withstand voltage spikes far above normal operating conditions.

This test is essential in industries like medical, automotive, and aerospace, where insulation integrity directly impacts safety and reliability.

The Importance of Hipot Testing

Hipot testing is a cornerstone of electrical safety, particularly in industries where failure is not an option. In medical devices, patient safety is paramount—any breakdown in insulation resistance could lead to electrical shock or malfunctions during critical procedures. The same applies to automotive and aerospace systems, which must perform reliably under extreme conditions. High-voltage tests conducted during production ensure that every device meets rigorous safety standards before it reaches the field. Neglecting this step can result in product recalls, lawsuits, or catastrophic failures, both financially and ethically.

There are three primary hipot test methods:

- AC test: applies an alternating voltage, which can expose insulation weaknesses that occur under dynamic voltage conditions.
- DC test: applies a direct voltage and is effective for identifying leakage currents and insulation resistance over time.
- Impulse test: simulates sudden, transient voltage spikes to assess how components withstand extreme surges.

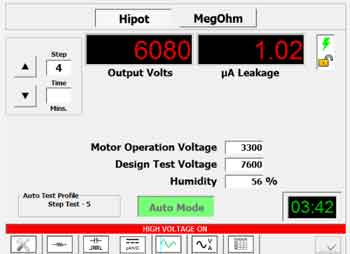

The examination process consists of three stages: preparation, examination, and analysis. It starts with ground bond tests to verify that the grounding path can safely handle fault currents. Once verified, the device under test is connected to a hipot tester, and the required voltage is applied for a set duration. During this period, leakage current is closely monitored, and any readings above acceptable limits indicate insulation failure or degradation.

Modern automated hipot testers have transformed the process, enabling the simultaneous examination of multiple devices, reducing human error, and accelerating production lines. These systems can store data for analysis, enabling trend monitoring and preventive maintenance. Equally important is the regular calibration and maintenance of the test equipment itself, as inaccurate or poorly maintained testers can lead to false pass/fail results, compromising both product quality and safety.

Hipot Test Types – Comparison Table

| Test Type | Description | Applications | Advantages/Limitations |
|---|---|---|---|
| AC Hipot Test | Applies alternating current at high voltage to the DUT | Common for transformers, cables, and general devices | Detects weaknesses under dynamic voltage but can stress capacitive loads |
| DC Hipot Test | Uses direct current to check insulation integrity | Ideal for capacitive loads like cables and motors | Easier leakage measurement but may not reveal AC-related weaknesses |
| Impulse Test | Simulates transient high-voltage spikes (surges) | Aerospace, automotive, and lightning surge test | Closest to real surge conditions but highly specialized |

Voltage Levels and Test Duration

Determining the correct test voltage and duration is crucial for an accurate dielectric withstand test.

Standard Formula:

Test Voltage ≈ 2 × Operating Voltage + 1,000 V

For instance, a product rated at 480 V would typically be tested at:

(2 × 480) + 1,000 = 1,960 V.

Typical test durations:

- Design tests (prototype/certification): ~1 minute for thorough insulation verification.
- Production tests (quality checks): ~1 second for efficient, large-scale assessment while maintaining safety.

These parameters ensure that insulation can endure unexpected surges without damaging the device or compromising user safety.
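The rule of thumb and the typical durations above can be sketched in a few lines of Python. This is only an illustration: the function names and the `DWELL_TIME_S` table are hypothetical, and the applicable standard for a given product may prescribe different voltages or dwell times.

```python
def hipot_test_voltage(operating_voltage_v):
    """Rule-of-thumb test voltage: twice the operating voltage plus 1,000 V."""
    return 2 * operating_voltage_v + 1000


# Approximate dwell times quoted in the text, in seconds (hypothetical table name).
DWELL_TIME_S = {"design": 60, "production": 1}


def plan_hipot_test(operating_voltage_v, test_kind):
    """Combine the rule-of-thumb voltage with the typical dwell time."""
    return {
        "test_voltage_v": hipot_test_voltage(operating_voltage_v),
        "dwell_time_s": DWELL_TIME_S[test_kind],
    }


# The 480 V example from the text gives a 1,960 V test voltage.
print(plan_hipot_test(480, "design"))
```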

Safety Considerations and Leakage Current Thresholds

Hipot testing involves high voltages, making the safety of both operators and equipment a top priority. Test setups must minimize the risk of electric shock or equipment damage.

Key safety practices:

- Grounding design: Properly ground all exposed conductive parts to divert fault current.
- Current limits: Keep total current limits as low as possible, typically between 0.1 mA and 20 mA, depending on the standard.
- Safe fixtures: Use enclosures, interlocks, and warning indicators to prevent accidental contact.
- Tester maintenance: Regular calibration ensures accurate readings and avoids false results.

In addition to dielectric strength tests, engineers often conduct insulation resistance tests and ground bond tests to confirm the existence of safe conductive paths. For high-voltage applications, partial discharge measurement is used to detect microscopic insulation defects that standard hipot testing may not reveal.

Interpreting Hipot Test Results

Interpreting the results of a high-potential test requires technical insight, as even minor deviations can have significant implications. A common challenge is understanding the measurements of leakage current. While some leakage is acceptable, excessive current indicates insulation breakdown, which can compromise electrical safety. Accurate analysis of these results requires experience and technical expertise. Production lines often use statistical process control (SPC) to monitor these results over time, ensuring consistency and identifying trends that may signal a developing problem. A clear interpretation of these test reports enables timely corrective action, minimizing disruptions to the production line.
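As a rough illustration of how a production line might combine a hard leakage limit with SPC-style trend monitoring, the sketch below uses simple three-sigma control limits computed from a baseline batch. The readings, the 5 mA limit, and the function names are invented for the example; real limits come from the applicable standard and the product's own history.

```python
from statistics import mean, stdev


def control_limits(readings_ma):
    """Return (lower, upper) three-sigma control limits for baseline leakage readings."""
    centre = mean(readings_ma)
    sigma = stdev(readings_ma)
    return centre - 3 * sigma, centre + 3 * sigma


def classify_reading(reading_ma, limit_ma, limits):
    """'fail' above the hard limit, 'investigate' outside the control limits, else 'pass'."""
    lower, upper = limits
    if reading_ma > limit_ma:
        return "fail"
    if reading_ma < lower or reading_ma > upper:
        return "investigate"
    return "pass"


# Made-up baseline leakage readings in mA from known-good units.
baseline = [0.50, 0.52, 0.49, 0.51, 0.50, 0.48, 0.51, 0.50]
limits = control_limits(baseline)

for reading in (0.51, 0.70, 5.5):
    print(reading, classify_reading(reading, limit_ma=5.0, limits=limits))
```

A reading can pass the hard limit and still be flagged for investigation, which is the kind of developing trend that SPC is meant to surface.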

Standards and Regulatory Compliance in Hipot Testing

Hipot testing must align with global standards to guarantee product safety and certification.

Key standards include:

- IEC 60950: High-voltage evaluation for IT and communication equipment (now superseded by IEC 62368-1).
- IEC 61010: Safety requirements for measurement, control, and laboratory equipment.
- UL and CSA: North American standards that require a dielectric strength test for certification.
- Partial discharge test: Used alongside hipot tests in power systems and transformer industries for detecting latent insulation weaknesses.

Adhering to these standards ensures compliance with legal requirements, enhances market acceptance, and protects manufacturers from liability.

The Future of Hipot Testing: Automation, AI & Predictive Maintenance

Emerging technologies are transforming dielectric strength (hipot) testing, making it more efficient, accurate, and safe. Automated test systems now reduce human error, streamline production line integration, and provide real-time data analytics for improved fault detection. The next wave of innovation includes integrating AI and machine learning to analyze large test datasets and predict insulation failures before they occur, enabling predictive maintenance and continuous quality assurance. Remote measurement capabilities, such as PLC-controlled testers and even drone-equipped systems, are expanding access to testing in hazardous or remote environments while minimizing operator exposure to high voltage. Companies adopting these modern approaches stay ahead of evolving safety mandates and deliver reliable, future-ready inspection solutions.

Frequently Asked Questions

What is the meaning of hipot?

Hipot, short for “high potential,” is a high-voltage safety test that verifies the insulation strength of electrical devices, ensuring they can withstand surges and prevent leakage current.

How is a hipot test done?

A device under test is connected to a tester that applies AC, DC, or impulse voltage across the insulation, typically between the conductors and the chassis or ground. Leakage current is measured, and any value above the specified limit indicates a failure.

What is a hipot failure?

A failure occurs when insulation cannot withstand the applied voltage, resulting in high leakage current or breakdown, which could lead to electrical shocks or equipment malfunctions.

Is the hipot test AC or DC?

Hipot testing can be performed with either AC or DC voltage. AC tests reveal weaknesses under fluctuating conditions, while DC tests are preferred for stable, capacitive loads.


Line Leakage Testing: Is It Right For Your Application

Line leakage testing evaluates unintended current paths under normal and single-fault conditions, ensuring electrical safety, touch current compliance, insulation integrity, and adherence to IEC 60990, IEC 60601, UL standards for equipment and medical devices.

What Is Line Leakage Testing?

A test measuring unintended AC current to chassis or earth under normal and fault conditions for safety.

✅ Measures touch current via IEC 60990/60601 test networks

✅ Verifies protective earth and insulation integrity

✅ Ensures compliance under normal and single-fault conditions

The Line Leakage test (LLT for short) is most often specified to be performed as a type test in a design or engineering laboratory or as a routine production line test on medical devices right before they ship. Not as commonly performed as a Dielectric Withstand or Ground Bond test in a production environment, LLT can cause some confusion for engineers and technicians alike.

However, technological advancements have begun to lessen the outward complexity of the test. For a practical overview of modern platforms, the electrical safety testers directory highlights integrated features used to streamline LLT procedures in production.

What used to be complicated set-ups and testing procedures has now been simplified with the use of multi-function testing instruments, all-in-one testing solutions equipped with the components and relay switching networks to perform leakage tests in an automated sequence with little or no input from the test operator. This makes for safer and more efficient testing.

Integrating such instruments into a broader quality program is easier when the electrical testing best practices are mapped to standardized workflows and record-keeping.

Electrical products including anything from appliances to handheld tools must be tested during the design and development phase in order to receive a safety agency listing. In these laboratory environments, the LLT is used to help ensure that the product’s manufacturing processes and assembly practices are satisfactory. Along with other common tests such as the Hipot and Ground Bond tests, the Line Leakage test is used in this situation primarily as an indicator of design quality. Design teams often cross-reference the dielectric voltage withstand test parameters to set conservative leakage screening limits during prototyping.

Some products, such as medical equipment, are designed with the intention of direct contact with a patient. Line Leakage tests should be performed on these products as a 100% routine production line test. Due to the sensitive nature of the applications for which this type of equipment is used, it is easy to see why rigorous testing must be performed as a routine test.

To establish safe baselines before patient-connected trials, a dedicated insulation resistance tester can verify insulation integrity under expected environmental conditions.

Whether the LLT is being performed as a type test or as a production test, the purpose of the test is the same: to determine if a product’s insulation has the integrity to prevent any current from reaching the operator. When current does find its way through or across any part of a product’s insulation system, it is known as leakage.

There are several methods manufacturers employ to prevent leakage, such as utilizing reinforced or double insulation systems and providing sufficient spacing between current-carrying conductors. Despite these measures, leakage current will be present in every product to some degree. Indeed, the electrical relationships between the very materials used in a product’s construction are what account for a substantial portion of any resulting leakage. While the resistance of the insulation will account for some leakage, using an insulating material in between conductors creates a certain amount of distributed capacitance which helps to facilitate leakage to ground. This current will look to travel through a product’s insulation system and return to ground by any means available, whether that is through a safety earth ground connection, or through an operator who is at ground potential.
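To give a feel for the capacitive part of this leakage, the sketch below evaluates the current through an ideal capacitance, I = 2πfCV. It ignores the resistive path through the insulation, and the 120 V, 60 Hz, 2.2 nF figures are assumed values chosen only for illustration.

```python
import math


def capacitive_leakage_ma(voltage_v, frequency_hz, capacitance_f):
    """Current through an ideal capacitance, I = 2*pi*f*C*V, returned in milliamps."""
    current_a = 2 * math.pi * frequency_hz * capacitance_f * voltage_v
    return current_a * 1000


# Assumed example: 2.2 nF of distributed capacitance to ground on a 120 V, 60 Hz supply.
leakage = capacitive_leakage_ma(120, 60, 2.2e-9)
print(f"{leakage:.3f} mA")
```

Even a few nanofarads of stray capacitance yields a leakage current on the order of a tenth of a milliamp at line voltage, which is why some leakage is present in every product.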

For example, medical devices that run off of line power have components that are in direct contact with a patient. In this case a wall outlet, an almost unlimited power source, has a direct connection to a patient who could already be sick or frail. It is of vital importance in this scenario that the leakage current produced by the product be small enough so as not to be perceived by the individual to whom the device is connected. More importantly, the insulation of the device needs to have the integrity to prevent any current from reaching the patient.

A CLOSER LOOK AT THE LINE LEAKAGE TEST

The Dielectric Withstand (Hipot) test and the Insulation Resistance (IR) test are common safety tests performed in both production line and lab environments. These tests do a good job of determining whether a product is manufactured correctly with good insulation, but they don’t tell us how much leakage current could be flowing through a product while it is running. Furthermore, these tests cannot tell us how that leakage current might change under different conditions. There is no way to determine what might happen if the product is connected to a power source incorrectly, if the operator plugs the product into an outlet that is wired incorrectly, or if the neutral side of the line opens up. The Line Leakage test was developed as a way of determining how leakage current would behave under conditions such as these. For context on how withstand testing complements LLT, the Hipot test overview outlines relationships between applied voltage levels and resulting leakage behavior during operation.

The Line Leakage test is actually a general term that is used to describe a series of tests. There are 4 different types of LLTs: Earth Leakage test, Enclosure Leakage test, Patient Leakage Current test, and Patient Auxiliary Current test. Each test is performed under nominal operating conditions as well as in a variety of fault conditions. These fault conditions provide us with valuable information about how a product’s leakage current will behave if operated incorrectly.

MEASURING DEVICES

Since the LLT is designed to measure the leakage current of a product while it is running, the way in which the current is measured is of vital importance. Therefore, Line Leakage tests incorporate measuring devices (MDs), which simulate the impedance of the human body. The placement of the measuring device is the factor that distinguishes one type of LLT from another. Measuring devices are specified by safety agencies depending on product classification and the standard to which the product is being tested. For the most part, MDs are resistive and capacitive networks designed to simulate the impedance of the human body in certain conditions. In most of today’s testers, these measuring devices are incorporated in the circuitry of the tester.

As a comparative benchmark to LLT readings, the electrical insulation resistance test helps quantify material condition and supports trending of insulation health over time.

FAULT CONDITIONS

The LLT is performed in both normal and single fault operating conditions. Measuring leakage current during fault conditions is important in order to determine if a product fails “safely”, if it fails at all. A product that fails safely will not produce excessive leakage current.
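One way to picture the test matrix is to enumerate each supply polarity against each single fault and then look for the worst-case reading. The sketch below is an assumption-laden illustration: the open-neutral and reversed-polarity cases follow the scenarios described earlier, while the open-ground case, the function names, and the readings are hypothetical; the applicable standard defines the real set of conditions.

```python
from itertools import product

POLARITIES = ("normal", "reversed")
# "none" is the normal operating condition; the fault list is illustrative only.
FAULTS = ("none", "open_neutral", "open_ground")


def llt_conditions():
    """List every polarity/fault combination, applying at most one fault at a time."""
    return [{"polarity": p, "fault": f} for p, f in product(POLARITIES, FAULTS)]


def worst_case(readings_ua):
    """Return the (polarity, fault) key with the highest leakage, and that reading."""
    key = max(readings_ua, key=readings_ua.get)
    return key, readings_ua[key]


for condition in llt_conditions():
    print(condition)

# Invented readings in microamps, keyed by (polarity, fault).
readings = {("normal", "none"): 45.0, ("reversed", "open_neutral"): 80.0}
print(worst_case(readings))
```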

TEST TYPES

EARTH LEAKAGE TEST:

The Earth Leakage test places the MD from the earth ground pin of the DUT to the neutral side of the line (which is referenced to ground). Before running this check, confirming protective earth integrity with a ground tester ensures the reference path is valid for accurate measurements.

ENCLOSURE LEAKAGE TEST:

The Enclosure Leakage test places the MD from one or more points on a DUT’s chassis to the neutral side of the line.

PATIENT AUXILIARY LEAKAGE TEST:

The Patient Auxiliary Leakage test places the MD between two different applied parts that come into contact with a patient’s body.

CONCLUSION

Although the Line Leakage test can seem somewhat confusing at times, it is an important test that should be given due consideration in any electrical safety testing routine. Technological advancements have made the LLT much easier to perform, and more manufacturers are performing it in both lab and production environments. With some research and the right equipment, Line Leakage testing can be performed as quickly and easily as more common tests such as a Hipot or Ground Bond test. This means that the LLT can be added to a safety testing routine without negatively impacting throughput. Adding it will help make electrical products safer and more reliable, which will save money in the long run.

From: Electrical Maintenance Handbook Vol. 10 - The Electricity Forum


Dielectric Voltage Testing - Standard Methods

Dielectric voltage testing verifies insulation strength via hipot, withstand voltage, and leakage current limits, ensuring electrical safety, IEC/IEEE compliance, and quality assurance across power systems, switchgear, transformers, cables, and consumer electronics.

What Is Dielectric Voltage Testing?

A high-potential (hipot) test that verifies insulation strength and leakage limits to ensure safety compliance.

✅ Measures withstand voltage and insulation breakdown margins

✅ Limits leakage current per IEC, IEEE, and UL safety standards

✅ Applied during production, FAT, commissioning, and field service

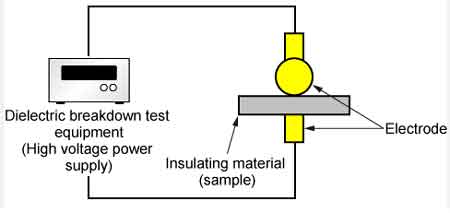

There are two standard methods from ASTM International: D877, Standard Test Method for Dielectric Breakdown Voltage of Insulating Liquids Using Disk Electrodes, and D1816, Standard Test Method for Dielectric Breakdown Voltage of Insulating Oils of Petroleum Origin Using VDE Electrodes.

VDE stands for Verband Deutscher Elektrotechniker, the Association for Electrical, Electronic, and Information Technologies, a German standards organization similar in function to the Institute of Electrical and Electronics Engineers (IEEE) in the United States. The D1816 method uses electrodes specified to conform to the design of a VDE standard, Specification 0370. The third standard method is IEC Standard 60156, Insulating liquids - Determination of the breakdown voltage at power frequency - Test method. Standard 60156, published by the International Electrotechnical Commission (IEC), a nonprofit, non-governmental international standards organization, uses electrodes that are geometrically similar to the VDE electrodes. Understanding how electrode geometry interacts with field uniformity aligns with the fundamental dielectric characteristics that govern liquid insulation behavior in practice. For additional context on test outcomes, industry guidance explains how breakdown voltage trends correlate with equipment reliability metrics.

In all three test methods, the electrodes are mounted in a test cell filled with enough insulating liquid to cover the electrodes completely. The test cell is then installed in a dielectric breakdown voltage apparatus so that an appropriate alternating current (AC) voltage can be applied across the electrodes. The AC voltage is increased until breakdown occurs, signified by the operation of automatic circuit interruption equipment included in the apparatus. The methods vary in the geometry of the electrodes, as noted above, in the gap spacing of the electrodes, and in the rate of rise of the applied AC voltage. A D1816 test cell is equipped with a two-bladed impeller and shaft to agitate the test specimen. A 60156 test cell may also be equipped with a similar impeller, but it is optional for this method. The same apparatus concept underpins the dielectric voltage withstand test used for verifying insulation integrity under elevated stress levels. Careful handling of the specimen reflects best practices for dielectric fluid management to minimize contamination and measurement scatter.

ASTM D877 is an older method. The electrodes resemble flat coins, and are spaced 0.1 inches (2.54 mm) apart. The rate of AC voltage rise across the electrodes during the determination of dielectric breakdown voltage by the D877 method is 3,000 volts-per-second. These parameters ultimately reflect the basic definition of breakdown voltage of oil as the minimum field intensity that triggers conduction.

Electrodes for the D1816 and IEC 60156 methods are similar to each other, resembling mushroom caps: each is a segment of a sphere of 25 mm radius, with the edges rounded to a radius of 4 mm. In the D1816 method, either of two gap settings may be used: 1 mm (0.039 inches) or 2 mm (0.079 inches). For a D1816 determination, the rate of rise for the AC voltage is 500 volts per second. For a determination using the IEC 60156 method, the spherical electrodes are spaced 2.5 mm apart, and the rate of voltage increase is 2,000 volts per second. Dielectric breakdown voltage determinations identify the presence of contaminants in the oil, including moisture, particles, and the by-products of oil aging and oxidation. Although distinct from breakdown testing, insulation resistance measurements complement these methods by trending bulk moisture ingress over time. In service, results are often interpreted alongside dissolved gas analysis to differentiate aging mechanisms and pinpoint emerging faults.
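The gap and ramp-rate figures quoted for the three methods can be collected in a small lookup table, which also makes it easy to estimate how long a ramp takes. This is a sketch only: the class and function names are hypothetical, the ramp is assumed to start from 0 V and rise linearly, and the 30 kV breakdown value is an arbitrary example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BreakdownMethod:
    name: str
    gaps_mm: tuple        # permitted electrode gap settings, in millimetres
    ramp_v_per_s: float   # rate of rise of the applied AC voltage


# Values as quoted in the text above.
METHODS = {
    "ASTM D877": BreakdownMethod("ASTM D877", (2.54,), 3000),
    "ASTM D1816": BreakdownMethod("ASTM D1816", (1.0, 2.0), 500),
    "IEC 60156": BreakdownMethod("IEC 60156", (2.5,), 2000),
}


def seconds_to_reach(method_name, breakdown_v):
    """Time for a linear ramp starting at 0 V to reach the given voltage."""
    return breakdown_v / METHODS[method_name].ramp_v_per_s


for name in METHODS:
    print(name, seconds_to_reach(name, 30_000), "s to reach 30 kV")
```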

From: Electrical Testing and Maintenance Handbook, Vol 11, The Electricity Forum


Insulation Resistance Explained

Insulation resistance indicates how effectively insulation prevents current leakage, thereby supporting electrical safety and reliability. Testing measures dielectric strength, detects moisture and aging, and guides maintenance to avoid faults while extending equipment life.

What is Insulation Resistance?

Insulation resistance measures the opposition that insulating materials provide to electrical current leakage, ensuring safety, reliability, and efficiency in electrical systems.

✅ Prevents electrical shock and equipment damage

✅ Detects deterioration from moisture, heat, or aging

✅ Ensures compliance with testing standards and codes

The Insulation Resistance (IR) test, often referred to as the Megger test, is over 100 years old and is considered a straightforward test. During my 15 years of inspection and testing work in Canada, the US, and internationally, I have observed various practices for performing and interpreting IR tests. Understanding dielectric voltage testing is essential when evaluating insulation resistance because it helps verify insulation strength under applied electrical stress.

The IR test is applied for:

- Pre-commissioning at the time of installation, to ensure minimum specifications are met
- Verifying proper installation after a repair
- Troubleshooting
- Periodic preventive maintenance

The value of insulation resistance depends on the equipment type and voltage rating, and is very sensitive to temperature. The results of the IR test typically fall within the range of 10 MΩ to 100 GΩ or higher. The acceptable level of IR depends on the equipment type, voltage class, and age of the equipment. Manufacturers usually provide the minimum recommended IR for the new equipment. In the absence of such data, IEEE 43-2013 and ANSI/NETA ATS-2013 guides could be referenced. Insulation resistance testing often works hand in hand with a dielectric voltage withstand test to confirm equipment reliability and safety standards in the field.

The insulation resistance test voltage is typically between 500 V and 10 kV, and the test duration is 1 minute. Negative polarity is preferred to accommodate the phenomenon of electroendosmosis. Because of the high voltage involved, restricting workers' access to the equipment under test is mandatory. An arc flash suit is not necessary, but the use of personal protective equipment is recommended, as is the use of hot sticks, insulated ladders, and a ground stick for discharge. Accurate insulation resistance measurements depend on proper grounding, which can be supported through the use of a ground tester to eliminate false leakage currents.

There are several insulation resistance test methods:

- 1-min insulation resistance test. The reading is compared to the minimum insulation resistance specification.
- Yearly insulation resistance test. A flat yearly trend indicates insulation is healthy.
- Dielectric Absorption Ratio (DAR): the ratio of a 60-second reading to a 30-second reading.
- Polarization Index (PI): the ratio of a 10-minute reading to a 1-minute reading.
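The two ratio methods reduce to simple divisions of timed readings. The sketch below shows them with invented readings in megohms; the function names are illustrative, and acceptance thresholds are deliberately left out because they depend on the equipment and the governing standard.

```python
def dielectric_absorption_ratio(r_30s, r_60s):
    """DAR: the 60-second reading divided by the 30-second reading."""
    return r_60s / r_30s


def polarization_index(r_1min, r_10min):
    """PI: the 10-minute reading divided by the 1-minute reading."""
    return r_10min / r_1min


# Invented readings in megohms taken during a single test run.
readings_mohm = {"30s": 800.0, "60s": 1000.0, "10min": 2500.0}

dar = dielectric_absorption_ratio(readings_mohm["30s"], readings_mohm["60s"])
pi = polarization_index(readings_mohm["60s"], readings_mohm["10min"])
print(f"DAR = {dar:.2f}, PI = {pi:.2f}")
```

Because both values are ratios of readings taken in the same run, they are far less sensitive to temperature than a single absolute reading.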

The surface leakage current is dependent on external contamination on the surface, such as dust and moisture. The surface leakage current may be significantly high for bushings, terminations, insulation surfaces, and large generators or motors that are contaminated. To eliminate surface leakage current, which reduces the accuracy of the insulation resistance measurement, a third terminal, known as the guard terminal, is used. The guard terminal shunts the measurement circuit, bypassing the leakage current. For more advanced validation of insulation resistance, utilities may also apply a hipot test to confirm dielectric integrity in high-voltage systems.

The temperature of the test object has a significant impact on the test results. The test results must be corrected to 20°C using ANSI/NETA ATS-2013 or corrected to 40°C using IEEE 43-2013.
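As a rough sketch of what such a correction looks like, the example below uses the widely quoted rule of thumb that insulation resistance halves for every 10 °C rise in temperature. This is an approximation and an assumption of the example, not the procedure from either document; the correction factors or formulas in the referenced standards should be used for real work. The function name and readings are hypothetical.

```python
def correct_ir(measured_mohm, measured_temp_c, reference_temp_c):
    """Approximate IR at the reference temperature using the halve-per-10-degC rule of thumb."""
    # Resistance falls as temperature rises, so correcting to a cooler
    # reference raises the value and correcting to a warmer one lowers it.
    exponent = (reference_temp_c - measured_temp_c) / 10
    return measured_mohm * 0.5 ** exponent


# Invented reading: 500 megohms measured at 30 degC.
print(correct_ir(500, 30, 20))  # corrected to a 20 degC reference
print(correct_ir(500, 30, 40))  # corrected to a 40 degC reference
```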

Insulation resistance test using the guard probe (blue wire) at the cable shield for a better reading

The following are ten recommendations that could be considered to improve the test results:

1. Negative polarity is preferred to accommodate the phenomenon of electroendosmosis.

2. Record the temperature and use the compensation factor to correct results to 20 °C for cables, transformers, and insulators and to 40 °C for motors and generators.

3. If it is humid or the equipment surface is not clean, use the “Guard Probe” to bypass the leakage current and for better accuracy.

4. If an underground cable is tested, compensate for the cable length as per ANSI/NETA ATS-2013.

5. For new equipment, if there is no recommendation from the manufacturer, use ANSI/NETA ATS-2013 or IEEE 43-2013.

6. For service-aged equipment, if there is no recommendation from the manufacturer, compare the phases to similar-age equipment.

7. Set up the leads at a distance from one another and without contact with any objects or with the floor to limit the possibility of leakage currents within the measurement line itself.

8. After the test is complete, make sure that the circuit is discharged. It can be discharged by short-circuiting the test set terminals and connecting them to ground.

9. Use the polarization index (PI) or dielectric absorption ratio (DAR) only when the insulation resistance is questionable. Otherwise, PI and DAR will be misleading.

10. When you buy an insulation resistance tester, make sure it is 10kV and can measure 10 Tera-ohm or higher.

To fully grasp the role of insulation resistance, see our insulation resistance explained guide, which connects it to related topics such as line leakage testing and test equipment calibration.


Why Calibrate Test Equipment

Calibrate test equipment to ensure accuracy, traceability, and compliance in electrical labs using ISO 17025 procedures, NIST standards, and metrology best practices for multimeters, oscilloscopes, power analyzers, and signal generators.

What Does It Mean to Calibrate Test Equipment?

Adjusting and verifying instruments against traceable standards to ensure accurate, compliant electrical measurements.

✅ Compare readings to NIST-traceable standards; adjust to within tolerance.

✅ Document calibration data, measurement uncertainty, and traceability.

✅ Schedule intervals per ISO 17025; label instruments with calibration status.

You’re serious about your electrical test instruments. You buy top brands, and you expect them to be accurate. You know some people send their digital instruments to a metrology lab for calibration, and you wonder why. After all, these are all electronic — there’s no meter movement to go out of balance. What do those calibration folks do, anyhow — just change the battery? These are valid concerns, especially since you can’t use your instrument while it’s out for calibration. But, let’s consider some other valid concerns. For example, what if an event rendered your instrument less accurate, or maybe even unsafe? What if you are working with tight tolerances, and accurate measurement is key to proper operation of expensive processes or safety systems? What if you are trending data for maintenance purposes, and two meters used for the same measurement significantly disagree? While digital meters avoid mechanical drift, understanding the behavior and limitations of legacy analog multimeters can clarify why calibration still matters in mixed toolkits today.

WHAT IS CALIBRATION?

Many people do a field comparison check of two meters, and call them “calibrated” if they give the same reading. This isn’t calibration. It’s simply a field check. It can show you if there’s a problem, but it can’t show you which meter is right. If both meters are out of calibration by the same amount and in the same direction, it won’t show you anything. Nor will it show you any trending — you won’t know your instrument is headed for an “out of cal” condition. For readers new to instrument types, a concise overview of what a multimeter is provides context for why comparison checks alone cannot verify accuracy.

For an effective calibration, the calibration standard must be more accurate than the instrument under test. Most of us have a microwave oven or other appliance that displays the time in hours and minutes. Most of us live in places where we change the clocks at least twice a year, plus again after a power outage. When you set the time on that appliance, what do you use as your reference timepiece? Do you use a clock that displays seconds? You probably set the time on the “digits-challenged” appliance when the reference clock is at the “top” of a minute (e.g., zero seconds). A metrology lab follows the same philosophy. They see how closely your “whole minutes” track the correct number of seconds. And they do this at multiple points on the measurement scales. Applying disciplined reference techniques goes hand in hand with knowing how to use a digital multimeter correctly during setup and verification steps.

Calibration typically requires a standard that has at least 10 times the accuracy of the instrument under test. Otherwise, you are calibrating within overlapping tolerances and the tolerances of your standard render an “in cal” instrument “out of cal” or vice-versa. Let’s look at how that works.

Two instruments, A and B, measure 100V within 1%. At 480V, both are within tolerance. At 100V input, A reads 99.1V and B reads 100.9V. But if you use B as your standard, A will appear to be out of tolerance. However, if B is accurate to 0.1%, then the most B will read at 100V is 100.1V. Now if you compare A to B, A is in tolerance. You can also see that A is at the low end of the tolerance range. Modifying A to bring that reading up will presumably keep A from giving a false reading as it experiences normal drift between calibrations. These examples hinge on basic voltage measurement concepts that a dedicated voltmeter embodies, reinforcing the need for clear specifications and traceability.
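The comparison above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, using the readings from the example; the function name `appears_in_tolerance` is a hypothetical helper, not part of any calibration software.

```python
def appears_in_tolerance(unit_reading, standard_reading, tolerance_pct):
    """Return True if the unit under test agrees with the standard's
    reading to within tolerance_pct percent of that reading."""
    allowed = standard_reading * tolerance_pct / 100.0
    return abs(unit_reading - standard_reading) <= allowed


A_READING = 99.1        # instrument A at a 100V input (from the example)
A_TOLERANCE_PCT = 1.0   # A is specified to measure within 1%

# Case 1: B is only a 1% instrument and happens to read 100.9V.
# Judged against B, A looks out of tolerance even though it is fine.
print(appears_in_tolerance(A_READING, 100.9, A_TOLERANCE_PCT))

# Case 2: B is accurate to 0.1%, so it reads between 99.9V and 100.1V.
# Judged against either extreme, A is in tolerance.
print(appears_in_tolerance(A_READING, 99.9, A_TOLERANCE_PCT))
print(appears_in_tolerance(A_READING, 100.1, A_TOLERANCE_PCT))
```

With a standard no better than the instrument under test, the verdict depends on where the standard itself happens to sit in its own tolerance band. With the tighter standard, the verdict is the same at both extremes.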

Calibration, in its purest sense, is the comparison of an instrument to a known standard. Proper calibration involves use of a NIST-traceable standard — one that has paperwork showing it compares correctly to a chain of standards going back to a master standard maintained by the National Institute of Standards and Technology. In many facilities, calibration is one pillar of broader electrical testing programs that support compliance, uptime, and safety objectives.

In practice, calibration includes correction. Usually when you send an instrument for calibration, you authorize repair to bring the instrument back into calibration if it was “out of cal”. You’ll get a report showing how far out of calibration the instrument was before, and how far out it is after. In the minutes and seconds scenario, you’d find the calibration error required a correction to keep the device “dead on”, but the error was well within the tolerances required for the measurements you made since the last calibration.

If the report shows gross calibration errors, you may need to go back to the work you did with that instrument and take new measurements until no errors are evident. You would start with the latest measurements and work your way toward the earliest ones. In nuclear safety-related work, you would have to redo all the measurements made since the previous calibration.

After any widespread discrepancy, it may also be prudent to confirm grounding system integrity with a purpose-built ground tester to rule out site issues that could affect measurements.

CAUSES OF CALIBRATION PROBLEMS

What knocks a digital instrument “out of cal”? First, the major components of test instruments (e.g., voltage references, input dividers, current shunts) can simply shift over time. This shifting is minor and usually harmless if you keep a good calibration schedule, and this shifting is typically what calibration finds and corrects.

But, suppose you drop a current clamp — hard. How do you know that clamp will accurately measure, now? You don’t. It may well have gross calibration errors. Similarly, exposing a DMM to an overload can throw it off. Some people think this has little effect, because the inputs are fused or breaker-protected. But, those protection devices may not trip on a transient. Also, a large enough voltage input can jump across the input protection device entirely. This is far less likely with higher quality DMMs, which is one reason they are more cost-effective than the less expensive imports. Following such events, performing insulation-resistance checks with a suitable megohmmeter can reveal latent damage before the next calibration cycle.

CALIBRATION FREQUENCY

The question isn’t whether to calibrate — we can see that’s a given. The question is when to calibrate. There is no “one size fits all” answer. Consider these calibration frequencies:

- Manufacturer-recommended calibration interval. Manufacturers’ specifications will indicate how often to calibrate their tools, but critical measurements may require different intervals.

- Before a major critical measuring project. Suppose you are taking a plant down for testing that requires highly accurate measurements. Decide which instruments you will use for that testing. Send them out for calibration, then “lock them down” in storage so they are unused before that test.

- After a major critical measuring project. If you reserved calibrated test instruments for a particular testing operation, send that same equipment for calibration after the testing. When the calibration results come back, you will know whether you can consider that testing complete and reliable.

- After an event. If your instrument took a hit — something knocked out the internal overload or the unit absorbed a particularly sharp impact — send it out for calibration and have the safety integrity checked, as well.

- Per requirements. Some measurement jobs require calibrated, certified test equipment — regardless of the project size. Note that this requirement may not be explicitly stated but simply expected — review the specs before the test.

- Monthly, quarterly, or semiannually. If you do mostly critical measurements and do them often, a shorter time span between calibrations means less chance of questionable test results.

- Annually. If you do a mix of critical and non-critical measurements, annual calibration tends to strike the right balance between prudence and cost.

- Biennially (every two years). If you seldom do critical measurements and don’t expose your meter to an event, calibration at long intervals can be cost-effective.

- Never. If your work requires just gross voltage checks (e.g., “Yep, that’s 480V”), calibration seems like overkill. But what if your instrument is exposed to an event? Calibration allows you to use the instrument with confidence.
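As a rough sketch of how the intervals above might be tracked, the following Python helper flags an instrument as due for calibration. The interval names follow the list; the day counts, the function name `calibration_due`, and the `event_since_cal` flag are illustrative assumptions, not values from a standard or a manufacturer.

```python
from datetime import date, timedelta

# Approximate day counts for the intervals discussed above (assumed values).
INTERVAL_DAYS = {
    "monthly": 30,
    "quarterly": 91,
    "semiannually": 182,
    "annually": 365,
    "every_two_years": 730,
}


def calibration_due(last_calibrated, interval, today, event_since_cal=False):
    """Return True if the instrument should be sent out for calibration.

    An event (drop, overload, hard impact) always triggers calibration,
    regardless of the scheduled interval.
    """
    if event_since_cal:
        return True
    if interval == "never":
        return False
    return today >= last_calibrated + timedelta(days=INTERVAL_DAYS[interval])


# Example: an annually calibrated meter, checked mid-year and after an event.
print(calibration_due(date(2024, 1, 1), "annually", date(2024, 6, 1)))
print(calibration_due(date(2024, 1, 1), "annually", date(2024, 6, 1),
                      event_since_cal=True))
```

The event flag overrides the schedule on purpose, which mirrors the point that even a "never calibrate" instrument deserves a check after it takes a hit.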

From: Electrical Maintenance Handbook, Vol 10, The Electricity Forum

Related Articles

Dielectric Voltage Withstand Test Explained

The Dielectric Voltage Withstand Test applies high voltage to verify insulation strength. It ensures transformers, wiring, and switchgear resist breakdown, helping prevent electrical hazards and confirming compliance with essential safety standards.

What is a Dielectric Voltage Withstand Test?

The Dielectric Voltage Withstand Test is a high-voltage insulation test that verifies electrical safety and ensures reliable system performance.

✅ Confirms insulation integrity in equipment

✅ Prevents breakdown under overvoltage conditions

✅ Ensures compliance with safety standards

The Dielectric Voltage Withstand Test is a critical safety procedure that every industrial electrician should be familiar with. This procedure, also known as a hipot test or high potential test, evaluates the effectiveness of electrical insulation in preventing potentially dangerous voltage breakdowns. By understanding the principles and applications of this procedure, industrial electricians can ensure the safety of electrical systems and prevent hazards such as electric shock, short circuits, and equipment damage. This article provides a comprehensive overview, covering its importance, procedures, and key factors to consider. Read on to gain valuable knowledge that will enhance your understanding of electrical safety and best practices.

To prevent dangerous dielectric breakdown in electrical systems, the dielectric withstand test is a required procedure for many applications. It assesses the integrity of insulating materials under high voltage stress, helping to ensure electrical safety and prevent equipment failures. Engineers often use dielectric voltage testing to confirm compliance with safety standards and prevent dangerous breakdowns in electrical equipment.

Dielectric Voltage Withstand Test Parameters and Comparisons

| Parameter | Description | Importance |
|---|---|---|
| Test Voltage | High voltage applied above normal operating levels | Confirms insulation can endure electrical stress without failure |
| Duration | Time the voltage is applied (typically 1–60 seconds) | Ensures insulation integrity under sustained conditions |
| Leakage Current | Small current flowing through insulation during the test | Rising leakage indicates deterioration or contamination |
| Breakdown Voltage | Voltage level at which insulation fails and current flows uncontrollably | Key factor in selecting suitable insulating materials |
| Pass/Fail Criteria | Device passes if leakage current remains below safety limit | Defines compliance with IEC, UL, and ISO standards |
| Complementary Tests | Insulation Resistance Test, Line Leakage Test | Provide additional evaluation of insulation health and performance |
| Test Equipment | Hipot testers, insulation resistance testers, ground testers | Accuracy and calibration critical for valid, reliable results |

Insulation Resistance

The Dielectric Voltage Withstand Test is often complemented by the Insulation Resistance Test. While the former focuses on assessing the insulation's ability to withstand high voltages, the Insulation Resistance Test measures the insulation's resistance to current flow at a lower voltage. Together, these procedures provide a comprehensive evaluation of the insulation's integrity, ensuring its ability to prevent electrical leakage and maintain safe operation. The insulation resistance explained page illustrates how this lower-voltage test complements withstand tests to provide a comprehensive view of insulation integrity.

Hipot Test

The term "Hipot Test" is a common abbreviation for "High Potential Test" and is another name for the Dielectric Voltage Withstand Test. This procedure involves applying a high voltage to the insulation for a specific duration to assess its ability to withstand electrical stress. By exceeding the normal operating voltage, the Hipot Test can reveal hidden defects or weaknesses that might not be apparent during regular use. A reliable hipot tester is essential for applying controlled voltages and measuring leakage current during withstand tests.

Breakdown Voltage

A key parameter in insulation testing is the breakdown voltage. This refers to the voltage level at which the insulating material fails, allowing current to flow through it. Exceeding the breakdown voltage can lead to a rapid increase in current, generating excessive heat and potentially causing a catastrophic failure. Understanding the breakdown voltage of different insulating materials is crucial for selecting appropriate insulation for specific applications and ensuring safe operating conditions.

Safety Standards

Various safety standards and regulations govern its application. Organizations like the IEC, UL, and ISO provide guidelines and specifications for procedures, voltage levels, and durations. Adhering to these standards ensures consistent and reliable practices, promoting electrical safety and preventing potential hazards.

Electrical Safety

The primary purpose is to ensure the electrical safety of devices and components. Identifying insulation weaknesses helps prevent electric shock, short circuits, and other electrical hazards that could pose risks to users or damage equipment. Prioritizing electrical safety through rigorous testing is essential for maintaining a safe operating environment and preventing accidents.

Leakage Current

During the Dielectric Voltage Withstand Test, a small amount of current, known as leakage current, may flow through the insulation. Monitoring this leakage current is crucial as it can indicate the condition of the insulation. An increase in leakage current can signal deterioration or contamination, potentially leading to insulation failure. By setting appropriate limits for leakage current, manufacturers can ensure that insulation meets the required safety standards. Complementary procedures, such as line leakage testing, provide additional insights into insulation health and electrical safety.

Test Equipment

Specialized equipment, such as Hipot testers or insulation resistance testers, is used to conduct the Dielectric Voltage Withstand Test. These devices apply controlled high voltages and measure the resulting leakage current. The accuracy and reliability of the equipment are essential for obtaining valid results and ensuring the effectiveness of the process. A dependable ground tester ensures that grounding systems work correctly, which is critical for accurate and safe dielectric testing.

Applications of Dielectric Voltage Withstand Test

It finds wide applications across various industries and products. From consumer electronics and household appliances to industrial equipment and medical devices, it is crucial to verify the safety and reliability of electrical insulation. Ensuring the integrity of insulation in these diverse applications helps prevent electrical hazards and maintain product performance.

Quality Control

Incorporating the test into quality control procedures is essential for manufacturers of electrical products. By performing this procedure during the production process, manufacturers can identify and reject faulty units before they reach the market. This proactive approach to quality control helps maintain high standards, reduces the risk of product failures, and enhances customer satisfaction. To maintain accuracy, it is vital to calibrate test equipment regularly, ensuring all high-voltage insulation tests deliver valid and reliable results.

Failure Analysis

Despite rigorous testing and quality control measures, insulation failures can still occur. When such incidents happen, a thorough failure analysis is conducted to determine the root cause. This analysis may involve examining the failed insulation, analyzing environmental factors, and reviewing historical data. The insights gained from failure analysis contribute to the improvement of insulation design, manufacturing processes, and maintenance practices, ultimately enhancing the reliability and safety of electrical products.

Frequently Asked Questions

What is the purpose of the Dielectric Voltage Withstand Test?

It aims to evaluate the effectiveness of a device's insulation by applying a high voltage. This helps determine whether the insulation can withstand electrical stress during normal operation, thereby preventing electric shock, short circuits, and product malfunctions.

How is the Dielectric Voltage Withstand Test performed?

A specialized "hipot tester" applies a controlled high voltage to the device and monitors the leakage current. The voltage and duration are predetermined based on safety standards. If the leakage current remains below a predefined limit, the device passes.
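The pass/fail decision described here can be sketched as a simple check on the leakage current recorded during the dwell time. This is a minimal illustration, not a hipot tester's actual firmware logic; the function name `hipot_passes`, the sample values, and the 5 mA limit are hypothetical. Real limits come from the applicable safety standard.

```python
def hipot_passes(leakage_samples_ma, limit_ma):
    """Return True only if every leakage-current sample taken while the
    test voltage was applied stayed below the predefined limit."""
    if not leakage_samples_ma:
        raise ValueError("no leakage samples recorded")
    return max(leakage_samples_ma) < limit_ma


# Hypothetical readings in milliamps, sampled during the dwell time.
steady_unit = [0.8, 0.9, 0.9, 1.0]
failing_unit = [0.8, 1.2, 6.5, 9.0]   # leakage climbs past the limit

print(hipot_passes(steady_unit, limit_ma=5.0))
print(hipot_passes(failing_unit, limit_ma=5.0))
```

Checking the maximum sample rather than the final one means a brief excursion above the limit still fails the unit.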

What is the difference between the Dielectric Voltage Withstand Test and the Insulation Resistance Test?

It applies a high voltage to check for insulation breakdown, while the Insulation Resistance Test measures resistance to current flow at a lower voltage. They complement each other to provide a complete evaluation of insulation health.

What are the acceptable results or pass/fail criteria?

The device passes if the leakage current during high-voltage application remains below a predefined limit, determined by factors such as operating voltage, insulation material, and intended application.

The Dielectric Voltage Withstand Test is a crucial procedure for ensuring electrical safety and preventing insulation failures in various applications. By applying a high voltage, this test assesses the insulation's ability to withstand electrical stress, helping to prevent hazards such as electric shock and short circuits. Complementary tests, such as the Insulation Resistance Test, provide a comprehensive evaluation of insulation health. Understanding key parameters such as breakdown voltage and leakage current is essential for interpreting test results and ensuring compliance with safety standards. By incorporating it into quality control processes and conducting thorough failure analysis, manufacturers can enhance the reliability and safety of electrical products.

Related Articles

Ground Tester Selection Criteria

A ground tester ensures accurate earth resistance, grounding integrity, and soil resistivity measurements using clamp-on, 3-point/4-point methods, plus loop impedance testing, boosting IEC/NEC safety compliance for electrical installations, bonding continuity, and earthing fault protection.

What Is a Ground Tester?

A ground tester measures earth resistance and verifies grounding integrity for safe, code-compliant electrical systems.

✅ Measures earth resistance, soil resistivity, and ground loop impedance

✅ Supports 3-point/4-point, clamp-on, and stake-less test methods

✅ Ensures NEC/IEC compliance, bonding continuity, and fault protection

Choosing sound ground tester selection criteria is an important decision. The electrical grounding component of an electrical facility can be easily overlooked. It doesn’t appear to have an active role. It isn’t moving, doesn’t emit light or sound, or provide data. It’s largely out of sight. But the electrical ground is in fact dynamic. It gets challenged and stressed like any other part of the electrical system. It can deteriorate and lose effectiveness. It should be checked, tested, and maintained, just as visible, active equipment must be. As a starting point, understanding the basics outlined in what electrical grounding is helps frame why ongoing verification matters.

Factors that degrade a grounding system, and therefore bear on ground tester selection criteria, include weather, corrosion, catastrophic events, soil properties, and the electrical plant itself. Weathering, especially the pressures caused by freezing and thawing, can break apart joints and welds and physically deteriorate a ground. Corrosion, promoted by electrical conductivity through moisture and dissolved salts in the soil, can eat away a grounding structure until virtually nothing remains below the surface. The massive energy of lightning strikes can cause the grounding structure to be sacrificed even while it is effectively diverting the strike from the electrical system. The basic condition of the soil itself can undergo long-term changes that may adversely affect electrical grounding capability. Surrounding industrial and residential expansion can lower the water table, placing a once-favorable ground in drier, less conductive soil. For context, the construction and placement of grounding electrodes influence how these environmental factors translate into real resistance changes.

And not to be overlooked are changes in the electrical demands of the facility itself. As sophisticated, sensitive electronic equipment is installed during the process of modernization, the original grounding requirement may no longer be adequate. The narrow voltage “windows” of computer and process control operations demand the maximum in ground efficiency if they are to function properly without noise interference. An older physical plant that was designed before electronics will most likely not meet this demand. Many facilities benchmark upgrades against grounding and bonding requirements to ensure compatibility with sensitive loads.

Evaluating a grounding electrode (the term includes rods, grids, ground beds, counterpoise systems, and similar variations) begins with the proper selection of a test instrument. The truly critical aspect of ground tester selection isn’t so much how to make the selection as it is simply to choose a ground tester in the first place! That is to say, the most common error is in the selection of a unit other than a ground tester to perform a ground tester’s function. Selecting purpose-built tools within the broader category of electrical testing ensures procedures align with proven measurement practices.

Because the goal of the evaluation is to measure ground resistance, it is often reasoned, by a common piece of faulty logic, that any ohmmeter will do. Generic multimeters, VOMs, and insulation testers with continuity ranges are all frequently used. The consequences are lost time and the risk of incorrect or unreliable readings. Time spent traveling to a job site can be wasted when it is discovered that the test instrument is not capable of performing a required test or conforming to a mandated procedure. And measurements taken with a generic ohmmeter are subject to uncertainties that reduce their reliability to a matter of chance. Relying on general-purpose electrical safety testers can compound these errors because their design priorities do not isolate ground impedance variables.

Generic ohmmeters are used to test ground electrodes by means of a method that is commonly referred to as a “Two-Point” or “Dead Earth” test. One lead of the tester is connected to the ground electrode being tested, and the other to a reference ground. The latter is most often the water-pipe system, but may also be a separately driven rod, metal fence post, or any convenient metallic connection to the soil. The resultant measurement is the series resistance of the entire loop, and its accuracy is contingent upon the reference ground (plus test leads) being of negligible resistance. The intervening soil is assumed to be contributing nearly all of the reading. The “two points” mentioned are the points of connection with the earth (electrode and reference ground), as distinguished from a Fall of Potential (three-point) test and a Wenner Method (four-point) test. The term “dead earth” refers to the reference ground not being part of the electrical system. When verification of an existing facility is the objective, technicians sometimes begin with simple checks like how to confirm an area is grounded before escalating to formal resistance measurements.

There are three major limitations to this method:

- The assumption that the reference is of negligible resistance.

- The spacing of the contact points is arbitrary.

- Interference from voltage transients in the soil.
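The first limitation can be illustrated numerically. Because the two-point reading is the series resistance of the whole loop, any resistance in the reference ground and test leads is added to the electrode's own resistance. The sketch below uses made-up resistance values and hypothetical function names purely to show the effect.

```python
def two_point_reading(electrode_ohms, reference_ohms, lead_ohms):
    """Series resistance of the whole loop, as a generic ohmmeter sees it."""
    return electrode_ohms + reference_ohms + lead_ohms


def reading_error_pct(electrode_ohms, reference_ohms, lead_ohms):
    """Percent by which the two-point reading overstates the electrode."""
    reading = two_point_reading(electrode_ohms, reference_ohms, lead_ohms)
    return (reading - electrode_ohms) / electrode_ohms * 100.0


# Assumed values: a 3-ohm reference ground and 0.5 ohm of test leads.
# A low-resistance electrode is swamped by the reference and leads...
print(reading_error_pct(electrode_ohms=2.0, reference_ohms=3.0, lead_ohms=0.5))

# ...while a high-resistance electrode is affected far less.
print(reading_error_pct(electrode_ohms=100.0, reference_ohms=3.0, lead_ohms=0.5))
```

The same extra 3.5 ohms that barely matters against a 100-ohm electrode nearly triples the reading for a 2-ohm electrode.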

The IEEE, in its standard for ground resistance testing (Std 81), observes of the two-point method: “…This method is subject to large errors for low-valued driven grounds but is very useful and adequate where a ‘go, no-go’ type of test is all that is required.”

Use of a specialized ground tester readily overcomes these problems. Four-wire bridge measurement and dedicated test probes isolate the resistance of the ground under test as the only component of the reading. Separation of current and potential test circuits enables the operator to position the test probes outside of the electrical sphere of the tested ground, while movement of the potential probe in accordance with established procedures verifies that adequate spacing has been achieved. And the separate current circuit enables the use of an AC test signal that can be measured at frequencies distinct from those of any interfering transients. The use of a specific, dedicated ground tester enables the operator to control and monitor the conditions of the test in a way that no other type of instrumentation affords. The outcome of the test is reliable, repeatable, and free of chance. Test reports can be composed to assure clients that the measurement was made in accordance with a recognized, dependable method. For complex installations, budgeting and scope planning often consider ground grid testing cost factors so that compliance testing is scheduled and resourced appropriately.
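One way to picture the spacing check described above is to compare readings taken with the potential probe at several positions: if they agree closely, the probe is outside the electrical sphere of the tested ground. The following is a minimal sketch under that assumption. The function name `spacing_is_adequate` and the 5% agreement threshold are illustrative choices, not values taken from a standard.

```python
def spacing_is_adequate(readings_ohms, max_spread_pct=5.0):
    """Return True if readings taken at different potential-probe
    positions agree to within max_spread_pct of their mean."""
    if len(readings_ohms) < 2:
        raise ValueError("need readings from at least two probe positions")
    mean = sum(readings_ohms) / len(readings_ohms)
    spread_pct = (max(readings_ohms) - min(readings_ohms)) / mean * 100.0
    return spread_pct <= max_spread_pct


# Hypothetical readings (ohms) as the potential probe is moved.
print(spacing_is_adequate([4.9, 5.0, 5.1]))   # readings agree closely
print(spacing_is_adequate([3.0, 5.0, 8.0]))   # readings keep changing
```

If the readings keep changing as the probe moves, the usual remedy is to place the current probe farther away and repeat the measurements.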

From: Power Quality & Electrical Grounding Handbook, Vol. 6 - The Electricity Forum