Capacitance Explained

Capacitance: Understanding the Ability to Store Electricity

Capacitance is an essential concept in electrical circuits: it measures the ability of a capacitor to store electrical charge and, with it, electrical energy. Capacitors are electronic components used in many circuits for functions such as filtering, timing, and power conversion, so capacitance plays a central role in circuit design and operation. This article provides an overview of capacitance, including its definition, its SI unit, and the difference between a capacitor and capacitance.

What is Capacitance?

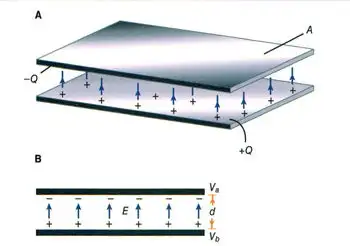

Capacitance is the ability of a capacitor to store electrical charge. A capacitor consists of two conductive plates separated by a dielectric (insulating) material. The plates are connected to an electrical circuit, and the dielectric between them prevents direct contact. When a voltage source is applied across the plates, equal and opposite electrical charges build up on their surfaces. The amount of charge that a capacitor stores at a given voltage is determined by its capacitance, which depends on the area of the plates, the distance between them, and the dielectric constant of the material.

The energy-storing capability of a capacitor is based on its capacitance. At the same voltage, a capacitor with a higher capacitance stores more energy than a capacitor with a lower capacitance. The energy stored in a capacitor is given by the formula:

Energy (Joules) = 0.5 x Capacitance (Farads) x Voltage^2
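
As a rough illustration of the formula above, the following Python sketch computes the stored energy for two capacitors at the same voltage. The function name and the component values (100 µF and 1000 µF at 12 V) are assumptions chosen for the example, not values from this article.

```python
def stored_energy(capacitance_f: float, voltage_v: float) -> float:
    """Return the energy in joules stored in a capacitor: E = 0.5 * C * V^2."""
    return 0.5 * capacitance_f * voltage_v ** 2


if __name__ == "__main__":
    # Illustrative values only: two capacitors charged to the same 12 V.
    for capacitance_f in (100e-6, 1000e-6):
        energy_mj = stored_energy(capacitance_f, 12.0) * 1e3
        print(f"{capacitance_f * 1e6:.0f} uF at 12 V stores {energy_mj:.1f} mJ")
```

Because the voltage is squared, doubling the voltage quadruples the stored energy, while doubling the capacitance only doubles it.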

The ability to store energy is essential for many applications, including filtering, timing, and power conversion. Capacitors are commonly used in DC circuits to smooth out voltage fluctuations and suppress noise. They are also used in AC circuits to filter out high-frequency signals.

What is Capacitance and the SI Unit of Capacitance?

Capacitance is defined as the ratio of the electrical charge stored on a capacitor to the voltage applied to it. The SI unit of capacitance is the Farad (F), which is defined as the amount of capacitance that stores one coulomb of electrical charge when a voltage of one volt is applied. One Farad is a relatively large unit of capacitance, and most capacitors have values that are much smaller. Therefore, capacitors are often measured in microfarads (µF) or picofarads (pF).
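
The definition above (capacitance = charge / voltage) can be sketched in a few lines of Python. The helper names `capacitance` and `format_capacitance` are hypothetical, and the sample values are illustrative assumptions.

```python
def capacitance(charge_c: float, voltage_v: float) -> float:
    """Return capacitance in farads from charge (coulombs) and voltage (volts)."""
    if voltage_v == 0:
        raise ValueError("voltage must be non-zero")
    return charge_c / voltage_v


def format_capacitance(capacitance_f: float) -> str:
    """Express a capacitance in F, µF, nF, or pF, whichever unit fits first."""
    for scale, unit in ((1.0, "F"), (1e-6, "µF"), (1e-9, "nF"), (1e-12, "pF")):
        if capacitance_f >= scale:
            return f"{capacitance_f / scale:g} {unit}"
    # Anything below one picofarad is still reported in pF.
    return f"{capacitance_f / 1e-12:g} pF"


if __name__ == "__main__":
    # One coulomb stored at one volt corresponds to one farad.
    print(format_capacitance(capacitance(1.0, 1.0)))
    # Illustrative: 47 microcoulombs stored at 10 V.
    print(format_capacitance(capacitance(47e-6, 10.0)))
```

The second helper reflects why small units are used in practice: typical component values are many orders of magnitude below one farad.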

The capacitance of a capacitor depends on several factors, including the distance between the plates, the surface area of the plates, and the dielectric constant of the material between the plates. Capacitance increases with larger plate area and decreases as the plates are moved farther apart. The dielectric constant describes how much the material increases the capacitance compared with a vacuum between the plates: the higher the dielectric constant of the material, the higher the capacitance of the capacitor.
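
For an ideal parallel-plate capacitor, these dependencies are captured by the standard textbook relation C = ε0 × εr × A / d, where A is the plate area, d is the plate separation, εr is the dielectric constant, and ε0 is the permittivity of free space. The sketch below assumes that idealized model (it ignores edge effects), and the dimensions used are illustrative.

```python
EPSILON_0 = 8.8541878128e-12  # permittivity of free space, in farads per metre


def parallel_plate_capacitance(
    area_m2: float, gap_m: float, dielectric_constant: float = 1.0
) -> float:
    """Ideal parallel-plate capacitance in farads (edge effects ignored)."""
    if gap_m <= 0:
        raise ValueError("plate separation must be positive")
    return EPSILON_0 * dielectric_constant * area_m2 / gap_m


if __name__ == "__main__":
    # Illustrative geometry: 1 cm^2 plates, 1 mm apart.
    air = parallel_plate_capacitance(1e-4, 1e-3)
    # Same geometry with an assumed dielectric constant of 4.
    filled = parallel_plate_capacitance(1e-4, 1e-3, dielectric_constant=4.0)
    print(f"air gap:       {air * 1e12:.3f} pF")
    print(f"with dielectric: {filled * 1e12:.3f} pF")
```

Under this model, doubling the plate area or halving the gap doubles the capacitance, and inserting a dielectric multiplies it by the dielectric constant.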

What is the Difference Between Capacitor and Capacitance?

Capacitor and capacitance are related concepts but are not the same thing. A capacitor is a physical electronic component: two conductive plates separated by a dielectric material, designed to store electrical charge. Capacitance is a property of that component, and it determines how much electrical charge the capacitor stores at a given voltage. Capacitance is measured in Farads, and a capacitor is rated by its capacitance value, typically in microfarads (µF) or picofarads (pF).

What is an Example of Capacitance?

One example of capacitance in practice is a common electronic component known as an electrolytic capacitor. These capacitors are used in a wide range of electronic circuits to store electrical energy, filter out noise, and smooth supply voltages. In an electrolytic capacitor, the dielectric is a very thin oxide layer formed on a metal anode, and the electrolyte acts as the opposite (cathode) plate. Because the oxide layer is so thin, these capacitors achieve a high capacitance in a small volume, which means they can store a relatively large amount of electrical energy.

Another example of capacitance is the human body. The capacitance of the human body is small, but static electricity can charge it to a high voltage, so it can still hold a noticeable amount of electrical charge. This is why people can sometimes feel a shock when they touch a grounded object, such as a metal doorknob or a handrail: the stored charge discharges through the point of contact. The capacitance of the human body is affected by several factors, including the size and shape of the body, as well as the material and proximity of nearby objects.
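
To put rough numbers on the human-body example, the sketch below assumes a body capacitance of 100 pF (the value used by the Human Body Model in electrostatic discharge testing) and a hypothetical static voltage of 5 kV. Both figures are assumptions for illustration; real values vary with the person and the surroundings.

```python
def body_discharge(voltage_v: float, capacitance_f: float = 100e-12):
    """Return (charge in coulombs, energy in joules) for a charged body.

    The 100 pF default is an assumed, model-based value, not a measurement.
    """
    charge_c = capacitance_f * voltage_v              # Q = C * V
    energy_j = 0.5 * capacitance_f * voltage_v ** 2   # E = 0.5 * C * V^2
    return charge_c, energy_j


if __name__ == "__main__":
    # Hypothetical static charge of 5 kV.
    charge_c, energy_j = body_discharge(5_000.0)
    print(f"charge: {charge_c * 1e6:.2f} uC, energy: {energy_j * 1e3:.2f} mJ")
```

Under these assumptions the stored charge and energy are tiny in absolute terms; the shock is noticeable mainly because the voltage is high and the discharge is very brief.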
